User experience matters, and JavaScript (JS) is a valuable technology used to create dynamic and interactive user experiences on the web.

But that’s where the problems arise: Search engines and their crawlers are a bit less fond of JavaScript.

While Google and some other search engines can execute and index content that uses JavaScript, it still has its challenges, and many crawlers struggle with it. This is a particular problem for AI crawlers, as most are unable to properly process JavaScript.

This means that if your website dynamically loads content via JavaScript, this can seriously impact your content’s visibility – both in traditional organic search and in increasingly popular AI-powered search results.

This guide explores the challenges of using JavaScript on your website and shares SEO best practices to help you overcome them. We’ll also show you how Seobility can help you analyze and optimize your website if it uses JS.

If you’re not 100% sure what JavaScript is and how it works, we recommend reading our JavaScript wiki article before continuing with this guide.

What’s so special about JavaScript SEO?

When JavaScript is used to dynamically load and display content, this is referred to as client-side rendering (CSR). That’s because the client device (browser) must process JavaScript content to render (as in, process and display) the page on the user’s device. This contrasts with server-side rendering, where the website’s server generates pre-rendered HTML for the browser. Everything is already prepared for the user, and crawlers or tools that don’t execute JavaScript can still read the full content.

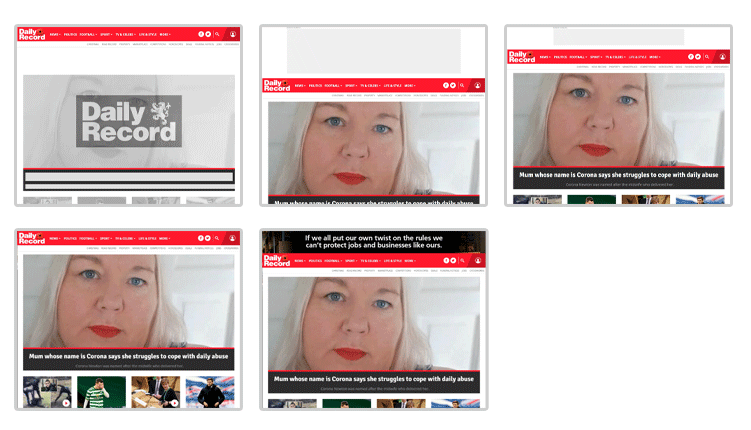

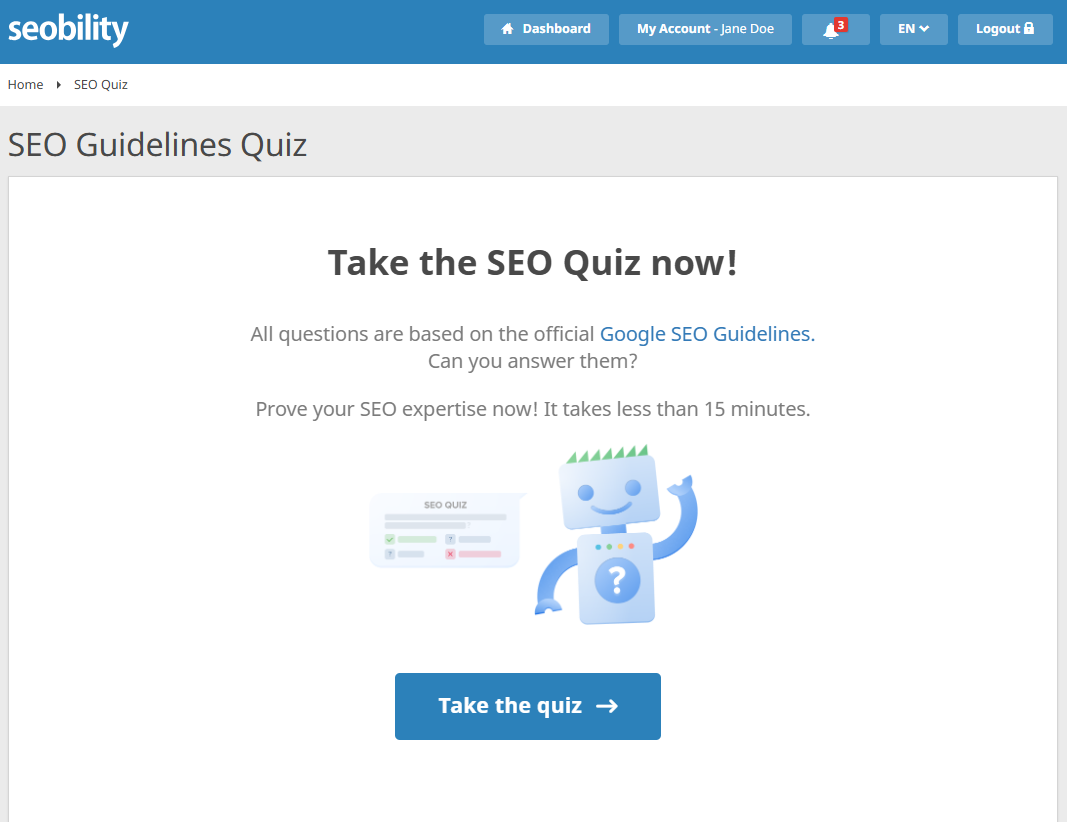

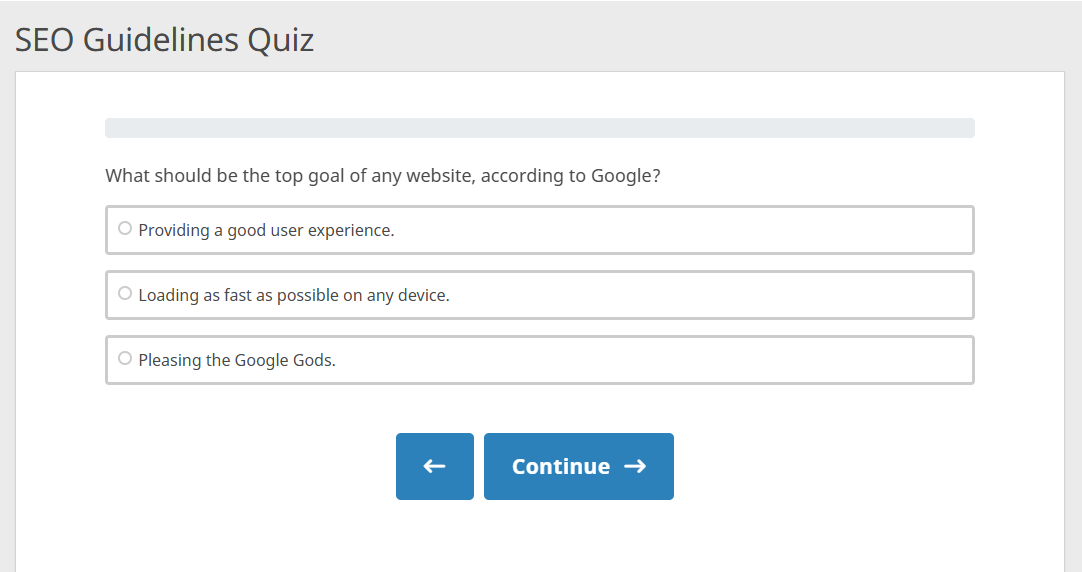

JavaScript is great for creating dynamic content – for example, quizzes, like the example shown below:

While users might enjoy and share it, only the static content displayed without JavaScript is likely to be crawled. This means that crawlers probably won’t see the content that is displayed after clicking the “Take the quiz” button:

That’s fine for a little quiz, but what about more important content?

If we want to rank well, we need our content to be ready and readable by search engine crawlers – even if we’re using JavaScript and client-side rendering. Googlebot is capable of rendering JS content, although some senior Googlers have urged caution around JavaScript, and Bingbot is not far behind. Other search engines and AI crawlers, however, often struggle to process any content delivered via JavaScript.

In a nutshell, resolving the client-side rendering challenge generally means optimizing your JavaScript and ensuring critical content is always prerendered by the server without relying on JavaScript. That ensures Google and other crawlers don’t miss anything essential. We’ll explore this in more detail later on.
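As a rough illustration of the difference, the sketch below builds the same page in both ways. The function names, the product data, and the /app.js path are hypothetical and not tied to any particular framework.

```javascript
// Hypothetical product data; a real site would read this from a database or API.
const product = { name: "Trail Running Shoe", price: "89.00 EUR" };

// Client-side rendering: the server sends an empty shell plus a script.
// The content only exists once the browser has run /app.js (an assumed path).
function renderCsrShell() {
  return '<div id="app"></div><script src="/app.js"></script>';
}

// Server-side rendering: the content is already part of the HTML response.
function renderSsrPage(item) {
  return (
    '<div id="app"><h1>' + escapeHtml(item.name) + "</h1>" +
    '<p class="price">' + escapeHtml(item.price) + "</p></div>" +
    '<script src="/app.js" defer></script>'
  );
}

// Escape characters that have a special meaning in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}
```

A crawler that does not execute JavaScript would find the product name in the output of renderSsrPage(product), but only an empty container in the output of renderCsrShell().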

| | Client-side rendering (CSR) | Server-side rendering (SSR) |
| --- | --- | --- |
| How is the content displayed (rendering) | Uses the visitor’s device resources to render content using JavaScript. | Generated on the server before delivery as pre-rendered HTML. |
| Why people like it | Dynamic and interactive websites, tailored user experiences. | Faster page loads, easier indexing, better technical SEO. |
| Crawling | Search engines and important crawlers may be unable to see dynamically loaded content. | Immediately available for indexing by all search engines and important crawlers. |

Challenges of JavaScript in SEO

As you probably expected, the fact that JavaScript SEO is even a thing (there is no such thing as PHP SEO, is there?) suggests there will be challenges. These are some of the big ones.

Incomplete crawling and indexing

If a search engine can’t properly read JavaScript-based content, that content won’t show up in search results. This can happen when information such as product details, reviews, or prices is loaded from external services (for example, a reviews or stock management plugin that might be pulling data from another website or company systems). Because this content appears after the page has loaded, search engines might not ‘see’ or be able to process it. They may even be blocked from accessing it altogether.

JavaScript can also create many versions of the same page, with slightly different URLs, based on user sessions. This might happen when tracking codes or filters are added to the URL. This can cause duplicate content issues, although this can be resolved with canonical tags.
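A minimal sketch of how a canonical URL could be derived from such URL variants is shown below. The list of ignored parameters is an assumption for illustration; which parameters are safe to drop depends on your own site.

```javascript
// Assumed list of parameters that do not change the page content.
const IGNORED_PARAMS = ["utm_source", "utm_medium", "utm_campaign", "sessionid"];

// Strip tracking/session parameters and the fragment from a URL.
function canonicalUrl(rawUrl) {
  const url = new URL(rawUrl);
  for (const name of IGNORED_PARAMS) {
    url.searchParams.delete(name);
  }
  url.hash = "";
  return url.toString();
}

// Build the canonical tag for the page head ("&" must be escaped in HTML attributes).
function canonicalTag(rawUrl) {
  const href = canonicalUrl(rawUrl).replace(/&/g, "&amp;");
  return '<link rel="canonical" href="' + href + '">';
}
```

With these assumptions, a URL such as https://example.com/shoes?utm_source=news&color=red&sessionid=abc#top is reduced to https://example.com/shoes?color=red, so all tracked variants point to one address.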

AI crawlers are unable to execute JavaScript

A study by Vercel on AI crawlers revealed that many current leading AI crawlers – ChatGPT, Claude, Meta, Bytespider, and Perplexity – cannot execute JavaScript at all. This creates a big difference between Google’s crawling capabilities and those of AI systems: while Google has invested heavily in JavaScript rendering, most AI crawlers only capture the initial HTML response and do not process any JavaScript-dependent content.

This means that JavaScript-rendered content might be completely invisible to AI systems, even when it ranks well in traditional search engines.
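To get a feel for what such a crawler has to work with, the following sketch checks which key phrases are missing from the raw HTML of a page. It is a rough heuristic based on regular expressions rather than a real HTML parser, and the function names are made up for this example.

```javascript
// Approximate the text available without JavaScript:
// the raw HTML minus scripts, styles, and tags.
function textWithoutJs(html) {
  return html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Return the phrases that cannot be found in the initial HTML.
function missingPhrases(html, phrases) {
  const text = textWithoutJs(html).toLowerCase();
  return phrases.filter((phrase) => !text.includes(phrase.toLowerCase()));
}
```

The raw HTML can be taken from the "view source" function of a browser or fetched with any HTTP client. Any phrase returned by missingPhrases is probably only added later by JavaScript.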

We don’t know yet if Gartner’s prediction about LLMs replacing traditional search will come true. But we all know someone who uses ChatGPT or Claude like a search engine. If you want to appear in those LLM-based search results, it’s important to ensure your content can be accessed by them.

Many JavaScript frameworks are not SEO-friendly

Some popular JavaScript frameworks used in web development, such as React, Angular, or Vue, can load new content without fully reloading the page. For example, a single-page application might display a company’s home, about, and privacy policy pages all on the same URL, without the URL in the address bar ever changing.

This is called client-side routing. It changes the page state – as in, what’s shown on screen – without loading a new webpage and, if it is not set up carefully, without changing the address.

This can cause problems for search engines, though, as they generally expect a unique URL for each page. If the content changes but the URL stays the same, it’s possible that parts of the site might not get indexed.
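One common way to avoid this is to give every view its own path and update the address through the browser’s History API. The sketch below assumes a hypothetical route table; the history object is passed in as a parameter so the same code works with window.history in a browser.

```javascript
// Hypothetical route table: every view gets its own path.
const routes = new Map([
  ["/", "Home"],
  ["/about", "About us"],
  ["/privacy", "Privacy policy"],
]);

// Look up the view for a path, with a fallback for unknown paths.
function resolveView(pathname) {
  return routes.get(pathname) ?? "Not found";
}

// Switch views without a full reload, but with a distinct URL per view.
function navigate(history, pathname, render) {
  history.pushState({}, "", pathname); // updates the address bar
  render(resolveView(pathname));
}
```

In a browser this could be called as navigate(window.history, "/about", showView), where showView is your own rendering function. The server also needs to answer requests for each of these paths directly, otherwise crawlers that open /about will not get the matching content.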

JavaScript impacts performance and sustainability

Multiple, large, or poorly optimized JavaScript files can be render-blocking. That means that the page cannot be properly displayed until the JS and any associated elements have been fully downloaded and processed by the user’s device. This increases page load (and display) times.

It can also trigger ugly layout shifts, where the content has to be rearranged live because other content loads late. How much a page shifts is measured by the Cumulative Layout Shift (CLS) metric. A classic example we have all seen is an eCommerce website that first loads, and then a few seconds later a banner with the latest offers is inserted at the top, pushing the rest of the content down. This can have a significant negative effect on performance and user experience – known ranking factors.

An example of cumulative layout shift: the layout of this page changes several times as it loads

Meanwhile, large file transfers and client-side rendering significantly increase a website’s carbon footprint. With increasing awareness of digital carbon and the pending launch of the W3C Web Sustainability Guidelines, it might be time to look closer at the size and impact of your JavaScript rendering.

Problems with tracking and analytics

Tools such as Google Analytics are themselves often JavaScript-based. This means they, in turn, can have issues with JavaScript-heavy websites. If their scripts fail to load or are significantly delayed behind the rest, interactions may not be properly tracked – reducing the quality of your analytics data.

Structured data and other enhancements might not be recognized

Some SEO and internationalization tools rely on JavaScript to insert schema markup (structured data) and localized content, particularly alt text, but sometimes also meta tags and page content. Relying on JavaScript to insert important content dynamically is risky: you can miss your opportunity to appear in rich snippet results, or search engines can pick up the wrong content.
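One way to reduce this risk is to generate the structured data on the server and include it in the HTML response. The following is a minimal sketch; the helper name and the product data are made up for illustration.

```javascript
// Build a JSON-LD script tag as a string, ready to be placed in the page HTML.
function jsonLdScript(data) {
  // Escape "<" so a value such as "</script>" cannot end the tag early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return '<script type="application/ld+json">' + json + "</script>";
}

// Hypothetical product markup using schema.org vocabulary.
const productSchema = {
  "@context": "https://schema.org",
  "@type": "Product",
  name: "Trail Running Shoe",
};
```

Calling jsonLdScript(productSchema) while the page is assembled on the server means the markup is present in the initial HTML, whether or not a crawler runs any scripts.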

Best practices for JavaScript SEO

You’ve read the (many) challenges. But before you panic, it’s worth remembering that not all JavaScript comes with the same challenges, and even then, there are usually good ways to address them for users and SEO alike.

Avoid using JavaScript when it doesn’t add value

JavaScript isn’t bad – it’s often the most effective way of adding or managing certain accessibility features, such as dark mode or responsive menus! But it’s worth considering whether the content you’re planning actually needs to be delivered in JavaScript. Perhaps there is another way to handle it – one that wouldn’t hide essential content from crawlers? Before you go any further with your optimizations, you should make sure any content that will always be displayed is provided without relying on JavaScript.

Render important content without JavaScript

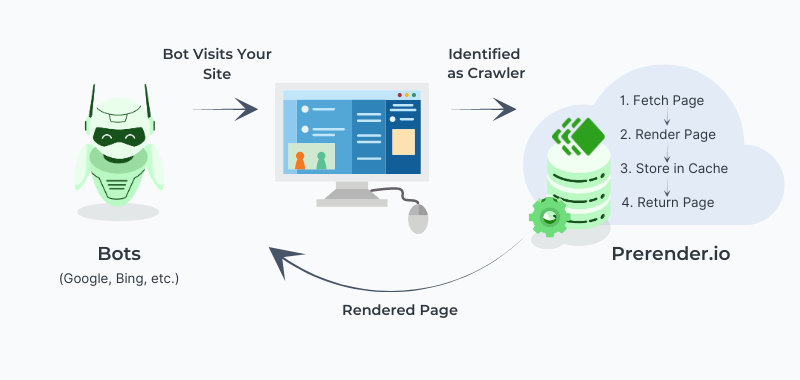

Ensure critical content, such as above-the-fold content, meta data, images, links, and schema markup, is accessible to search engines without JavaScript. With server-side rendering (SSR), the server builds the full HTML for each request. With pre-rendering, also known as static site generation (SSG), pages are built once in advance and served as static HTML. Either way, the page is fully built before it reaches the browser. For JavaScript-heavy sites where full SSR or SSG approaches aren’t practical, dynamic rendering can be an effective compromise. It shows a pre-rendered version to search engines and other crawlers, while giving human visitors the full interactive experience with JavaScript. Some frameworks, such as Next.js or Nuxt.js, support server-side rendering and static generation natively, while pre-rendering tools exist for other JavaScript frameworks.

One tool for this purpose is Prerender, which automatically creates ready-to-index versions of your pages. You can read more about pre-rendering there.

How pre-rendering works (Source)

The idea is to ensure your critical content is always available and ready to be indexed, even if JavaScript arrives late to the rendering rendezvous.
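The decision at the heart of dynamic rendering can be sketched in a few lines. The list of user-agent tokens below is illustrative and far from complete, and the function names are assumptions rather than part of any specific tool.

```javascript
// Illustrative, non-exhaustive list of crawler user-agent tokens.
const CRAWLER_PATTERN = /googlebot|bingbot|gptbot|claudebot|perplexitybot|bytespider/i;

// True if the user agent string contains one of the known crawler tokens.
function isKnownCrawler(userAgent) {
  return CRAWLER_PATTERN.test(userAgent || "");
}

// Decide which version of the page to serve for this request.
function chooseVariant(userAgent) {
  return isKnownCrawler(userAgent) ? "prerendered" : "client-rendered";
}
```

A server could call chooseVariant with the User-Agent request header and serve the matching version. Both versions should contain the same content, so that crawlers and human visitors are not shown different pages.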

Use SEO-friendly lazy loading and progressive enhancement

Design your website in a way that ensures basic content can always load, even if JavaScript fails or scripts and other assets take a while to load. Progressive enhancement is about providing something functional in static HTML that loads and meets key objectives without JavaScript, then using JavaScript to add interactive features on top.

For example, a blog post can be designed to show text and images first, while extra features such as an interactive comment section can be displayed after, once JavaScript is available. This ensures your core content is accessible and indexable, even if JavaScript doesn’t load properly.

In a similar vein, lazy loading is a great technique to improve page speed by only loading images or other assets when needed. However, search engines may not always index that content properly if the lazy loading relies on JavaScript. Be sure to:

- Use the HTML attribute loading="lazy" for images

- Don’t hide content with display: none or visibility: hidden

- Always include a placeholder image (like src="fallback.webp")

This ensures search engines can access and understand your content, even before JavaScript loads.
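The points above can be combined in a small helper that writes image tags on the server. This is a sketch with made-up names. The explicit width and height are an addition that lets the browser reserve space for the image, which also helps against layout shifts.

```javascript
// Escape characters that could break out of an HTML attribute value.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;");
}

// Build an <img> tag with a real src and native lazy loading.
// Pass lazy: false for critical images, such as those above the fold.
function imageTag({ src, alt, width, height, lazy = true }) {
  const attrs = [
    'src="' + escapeAttr(src) + '"',
    'alt="' + escapeAttr(alt) + '"',
    'width="' + Number(width) + '"',
    'height="' + Number(height) + '"',
  ];
  if (lazy) attrs.push('loading="lazy"');
  return "<img " + attrs.join(" ") + ">";
}
```

Because the src attribute is always filled in the HTML itself, a crawler can discover the image without running any script.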

Optimize JavaScript for performance and sustainability

Large scripts can slow down pages, hurting SEO and user experience, while increasing your digital carbon footprint. Fortunately, there are ways to minimize this impact:

- A good place to start is by minifying and compressing your JavaScript files to reduce their size and load time. Many caching and optimization tools include automatic code minification while enabling GZIP compression for faster, smaller code. There are also minification tools online for any other code.

- You can also use the async and defer attributes on your script tags so that other content loads first. Many optimization plugins can also take care of this.

- But you should also check that your JavaScript isn’t render-blocking, as this can affect your Core Web Vitals.

Sounds complicated? Don’t worry – our article on page speed optimization will guide you through the implementation of these steps!
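For a quick first check, the sketch below lists external scripts in the head of a page that have neither async nor defer and are not modules. It is a simple regex-based heuristic with a made-up function name, not a replacement for a proper performance audit, and it ignores inline scripts.

```javascript
// Find external scripts in <head> that are likely to block rendering.
function findBlockingScripts(html) {
  const headMatch = /<head\b[^>]*>([\s\S]*?)<\/head>/i.exec(html);
  if (!headMatch) return [];

  const blocking = [];
  const scriptTag = /<script\b([^>]*)>/gi;
  let match;
  while ((match = scriptTag.exec(headMatch[1])) !== null) {
    const attrs = match[1];
    const src = /\bsrc\s*=\s*["']([^"']+)["']/i.exec(attrs);
    // Remove quoted values so words inside a file path are not mistaken for attributes.
    const bare = attrs.replace(/=\s*("[^"]*"|'[^']*')/g, "");
    const nonBlocking =
      /\b(async|defer)\b/i.test(bare) ||
      /\btype\s*=\s*["']module["']/i.test(attrs); // module scripts are deferred by default
    if (src && !nonBlocking) blocking.push(src[1]);
  }
  return blocking;
}
```

Scripts returned by this function are candidates for async or defer, provided nothing on the page needs them to run before the first render.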

You can also use Chrome dev tools to check coverage, as in, how much code is actually used on the page. That will help you to find unused JS elements that unnecessarily slow down your website.

More technically inclined people can also consider code splitting – breaking larger files or libraries into smaller, manageable chunks that load on demand.
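As a minimal sketch of loading on demand, the helper below wraps a loader function so that a chunk is requested only on first use and only once. The helper name and the ./quiz.js module in the comments are hypothetical.

```javascript
// Wrap a loader so the chunk is requested at most once, on first use.
function loadOnce(loader) {
  let pending = null;
  return function load() {
    if (pending === null) pending = loader();
    return pending;
  };
}

// Browser usage (assumes a hypothetical ./quiz.js module that exports startQuiz):
// const loadQuiz = loadOnce(() => import("./quiz.js"));
// button.addEventListener("click", async () => {
//   const quiz = await loadQuiz();
//   quiz.startQuiz();
// });
```

Bundlers that support dynamic import() typically place such a module in a separate file, so visitors who never start the quiz never download its code.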

Developers can also consider whether vanilla JavaScript (JavaScript that does not rely on existing premade functions, known as libraries) or a more streamlined library may be more efficient than using features dependent on larger libraries.

Monitor for JavaScript errors and correct rendering

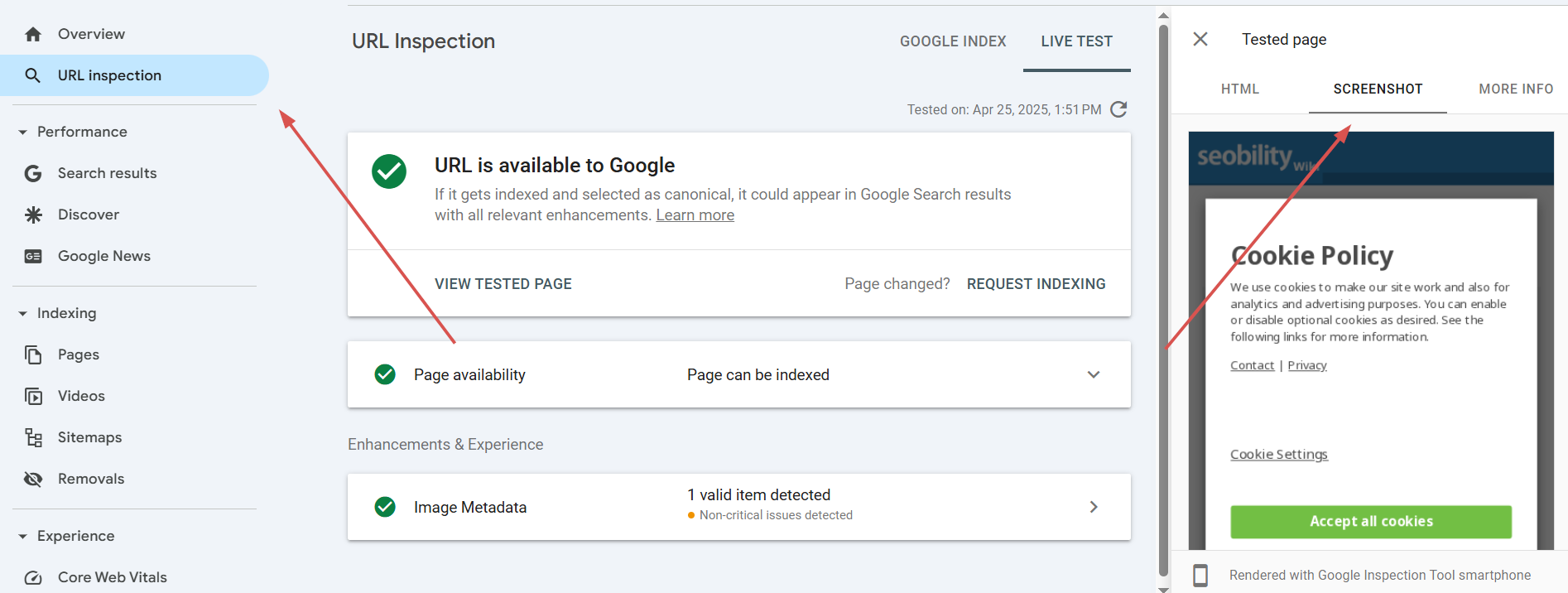

JavaScript errors can break your pages and prevent indexing. On the technical side, Google Search Console and Chrome dev tools can help you to identify errors and debug JavaScript.

It’s also important to make sure that your JS-based content is rendered correctly by crawlers. However, it can be hard to see what a crawler will see by yourself. Google Search Console can provide some hints in its URL Inspection tool. This tool lets you inspect any individual page to be sure it is being rendered correctly by Google. See below for an example.

Nevertheless, sometimes it helps to get a bit more detailed information with a little more support and context: That’s where Seobility’s ability to crawl websites with content provided dynamically via JavaScript can help.

How Seobility can guide you on your JavaScript SEO journey

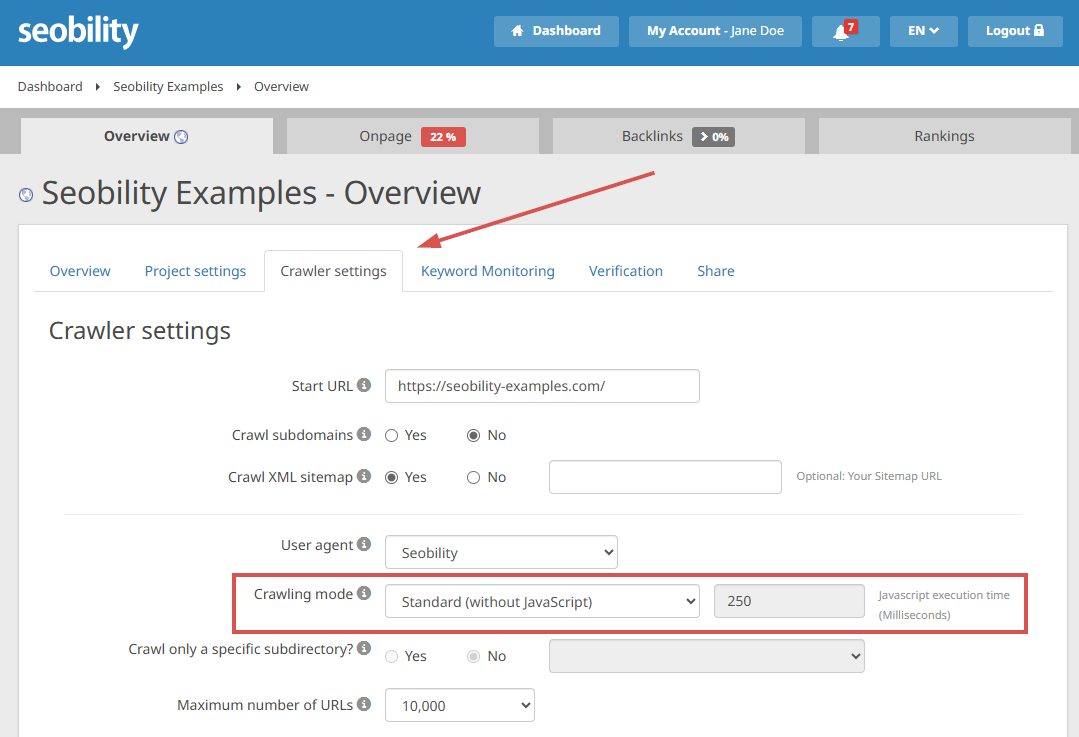

Seobility’s Website Audit has two crawling modes – the standard mode without JavaScript, and Chrome (JavaScript-enabled) mode.

To activate JavaScript crawling, go to Overview > Crawler Settings for your project.

JavaScript crawling mode is essential if working with JavaScript-heavy sites: It allows analysis of the generated code rather than just the original HTML and the ‘instructions’ in the JavaScript. This gives you better insight into what JavaScript-capable search engines actually see, although it’s still not a guarantee.

You can also set the JavaScript execution time to simulate the ‘patience’ of crawlers. This allows you to see exactly what search engines might or might not detect.

Please note that JavaScript-enabled crawls take significantly longer than standard HTML-based crawls.

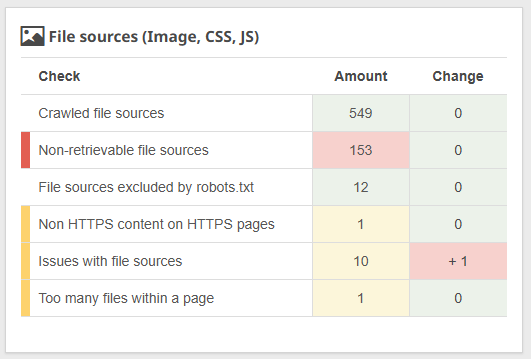

The File Sources Report, found under On-page > Tech & Meta, can also provide useful insights and highlight problems with your JavaScript files.

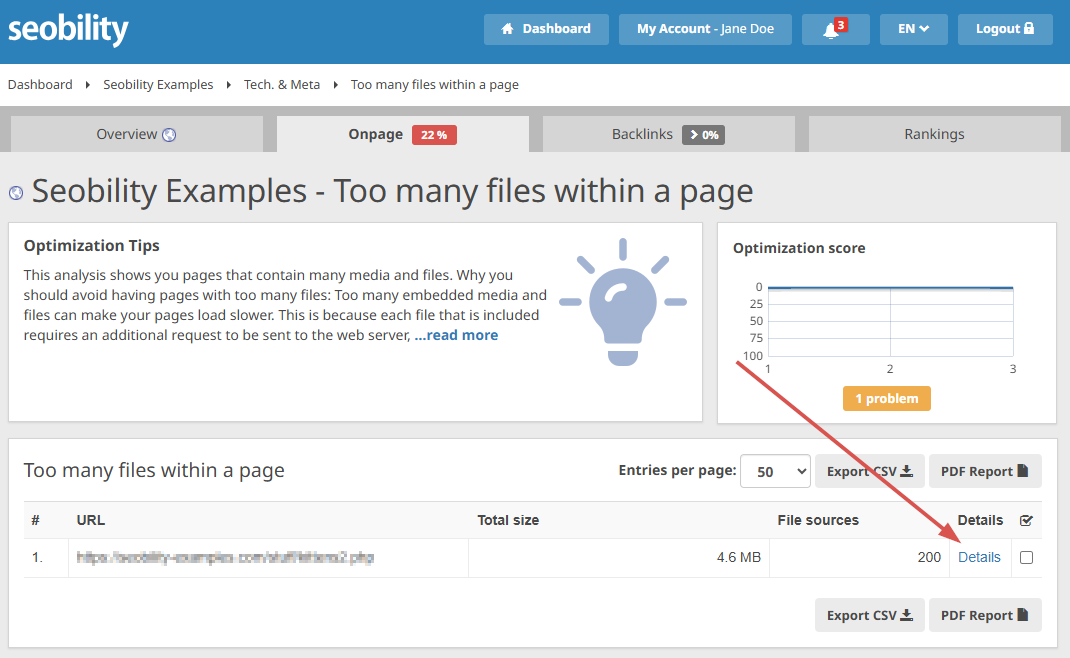

The File Sources Report highlights any problems relating to files used on your website, including JavaScript files, for example when there are simply too many JavaScript files, or when these files are large and affect performance.

JavaScript issues can also show up in Seobility’s analysis in other ways, though:

- Missing meta data, alt attributes, or something else you swear you included? – This can happen when images or critical content are injected via JavaScript.

- Unrecognized lazy-loaded images? – Critical images shouldn’t be lazy loaded, otherwise crawlers – including Seobility’s own – might overlook them!

- Too many JavaScript files, but you never knew they were there? – Click on Details alongside the report to find the URLs – so you can start tracking them down.

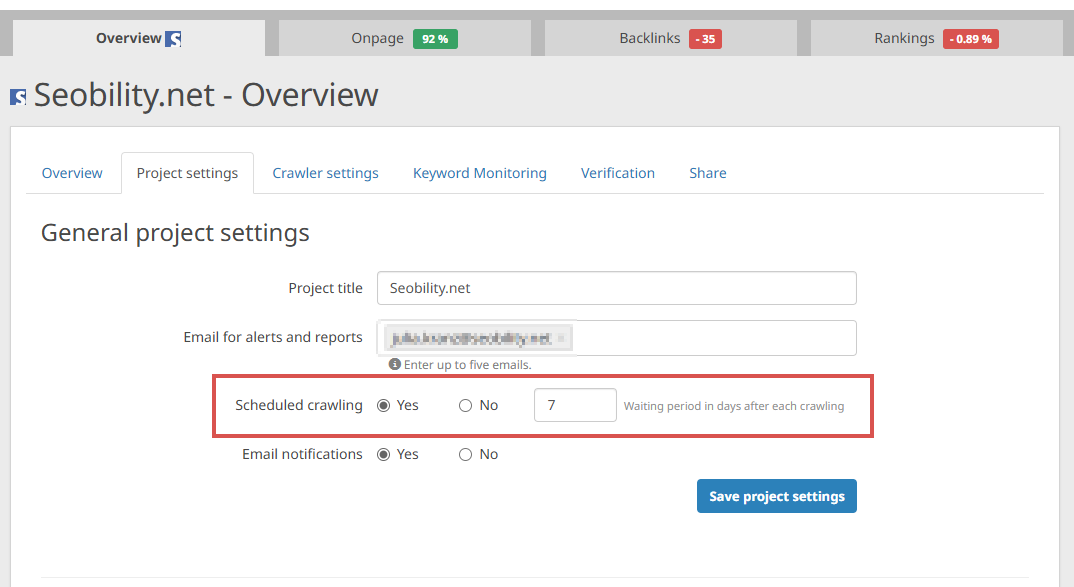

You can also set up Seobility to recrawl your site periodically:

Overview > Project settings

This allows you to track your progress optimizing SEO on your JavaScript-rich website – or act fast when something goes wrong!

JavaScript SEO: Not necessarily a contradiction

Yes, dynamic content injected by JavaScript poses a few challenges for SEO, affecting the way search engines crawl, render, and index content. But best practices such as server-side rendering (SSR), progressive enhancement, lazy loading, optimizing your JavaScript, and carefully monitoring for errors can improve how search engines process your content.

While minimizing JavaScript and dynamic content injection all round is probably going to save you a lot of headaches (and carbon), many of these best practices apply to more than just JavaScript. At the same time, don’t let the fiddly bits put you off: JavaScript is almost essential if you want to provide a responsive, accessible user experience, and it can be optimized for SEO.

Experience JavaScript-enabled SEO crawling for yourself with Seobility – sign up for a free 14-day trial right now. What are you waiting for? Run, don’t crawl! (Sorry.)