The World Wide Web has grown into a huge mass of information over recent decades and is still growing rapidly. Search engines like Google cannot fully capture this ever-growing number of web pages, which is why they only crawl a certain number of URLs per website. This limit is commonly referred to as crawl budget. In this article, you will learn why this concept is so important and how to optimize the crawl budget of your website. At the end of the article, you will also find a comprehensive infographic that summarizes the most important points.

Why is crawl budget so important?

Before your site can appear in the search results, it first has to be crawled and indexed by Googlebot. Efficient crawling is essential if you want all your important pages to be added to Google’s index. Therefore, you have to direct Googlebot through your website properly so that it can find and crawl all important, high-quality content. This so-called “crawl optimization” has become an important part of search engine optimization.

Crawl optimization is especially relevant for large websites with many subpages (e.g., online shops). Due to the high number of URLs, there’s the danger that Google spends too much time on irrelevant areas of these websites, stops crawling and thus can’t find the important pages. Small and medium-sized websites with a few thousand URLs usually do not have to worry. Google can easily capture all subpages there and include them in its index.

Defining crawl budget

Before you start optimizing the crawl budget of your website, you have to understand what the term really means. Google published a detailed article about this topic on its Webmaster Central blog. The most important aspects are summarized below:

Basically, the term “crawl budget” combines two concepts: crawl rate limit and crawl demand.

Crawl rate limit refers to the number of simultaneous parallel connections that Googlebot can use to crawl your website, as well as the time it waits between fetches. It depends on how fast your server responds and whether server errors occur.

Crawl demand describes how much Google wants to crawl your website. It depends on the popularity of your URLs and on how stale Google’s copies of them are. For example, if your site has few backlinks or outdated content, Google may find it less interesting and not use the maximum crawl rate that would be technically possible.

In summary, the term crawl budget thus describes the number of URLs on your website that Googlebot can crawl from a technical point of view and also wants to crawl from a content perspective.

Factors that negatively impact crawl budget

Your website’s crawling and indexing can be negatively affected by various factors such as:

-

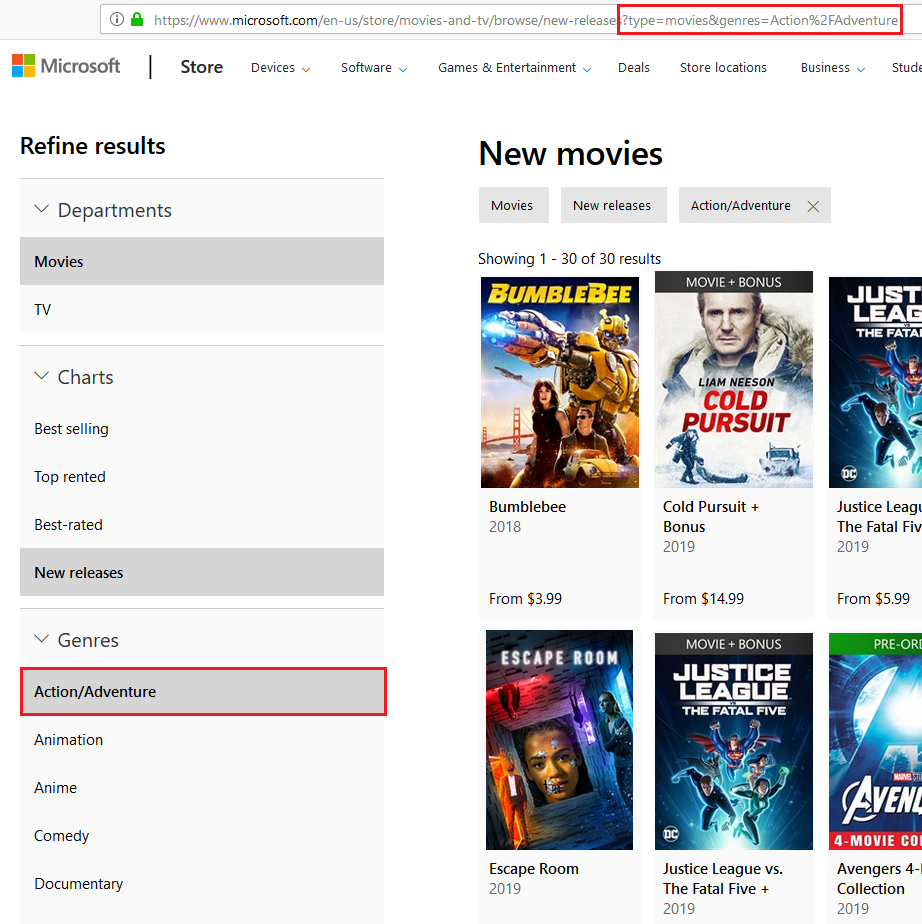

Faceted Navigation: This type of navigation is often used in online shops. It enables users to limit results on a product category page based on criteria such as color or size. For this purpose, URL parameters are added to the respective URL:

Screenshot of microsoft.com

In the example above, the results are restricted to the Action/Adventure genre by adding the parameter &genres=Action%2FAdventure to the URL. This creates a new URL that wastes crawl budget because the page does not provide any new content but only shows an extract of the original page.

- Session IDs in URLs: Session IDs should no longer be used, as they create a massive number of URLs for the same content.

- Duplicate Content: If multiple pages have the same content, Google has to crawl many URLs without finding anything new. This wastes resources that could be used for relevant and unique content on other pages.

- Soft Error Pages: These are pages that can be retrieved (as opposed to 404 error pages) but do not provide the content you want. The server returns the status code 200 but cannot deliver the actual page content.

- Hacked Pages: Google always wants to provide its users with the best results possible, and manipulated pages are definitely not among them. Therefore, if Google detects such pages on your website, it may crawl your site less.

-

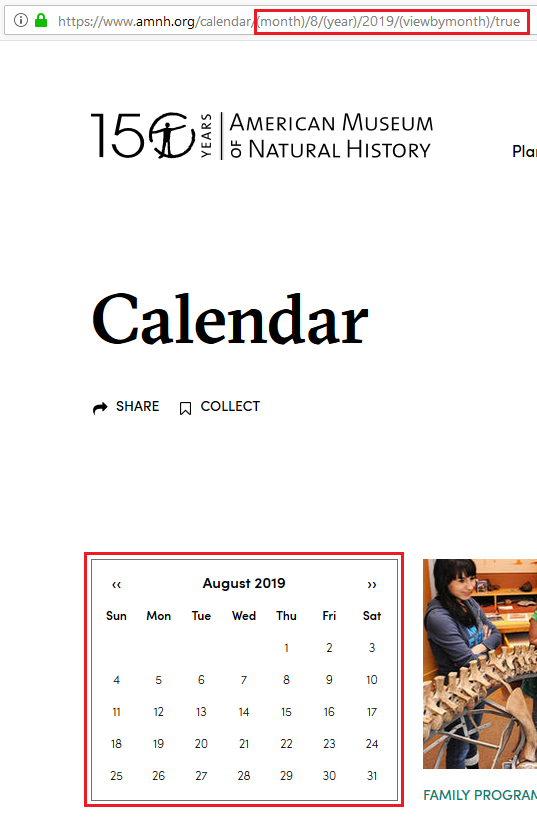

Infinite Spaces: Infinite spaces can arise, for example, if there’s a calendar with a “next month” link on your site as Google can keep following this link forever:

Screenshot of amnh.org

As you can see in this example, each click on the right arrow creates a new URL. Google could follow this link forever which would create a huge mass of URLs that Google has to retrieve one by one.

- Content of low quality (thin content) or spam content: Google doesn’t want to have such pages in its index and may therefore crawl your site less when such content appears on it.

- Redirect chains: If you link to a page that redirects to another page, Googlebot has to request multiple URLs until it gets to the actual content. Google does not follow the redirects immediately but treats them as individual pages, which wastes crawl budget.

- Cache Buster: Sometimes resources, such as CSS files, have names like style.css?2345345. The parameter that’s added with a question mark is generated dynamically each time the file is called in order to prevent the file from being cached by web browsers. However, this also leads to Google finding a high number of such URLs and retrieving them individually.

-

Missing redirects: If general redirects for www. and non-www, as well as http and https, are not set up correctly, this will cause the number of URLs that can be crawled to double or even quadruple. For example, the page www.example.com would be available under four URLs if no redirect was configured:

http://www.example.com

https://www.example.com

http://example.com

https://example.com -

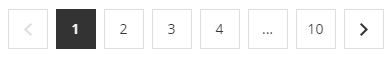

Poor pagination: If you have pagination where there are only buttons to click back and forth (i.e. no page numbers), Google has to retrieve a large number of pages in order to capture the pagination completely. Therefore, you should use pagination like this instead:

Screenshot of zaful.com

Here, Googlebot can directly navigate from page 1 to the last page and get a quick overview of all pages that have to be crawled.
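Several of the factors above (session IDs, cache busters and missing redirects) come down to many URLs pointing at the same content. As a rough illustration, the following Python sketch groups such variants under one normalized URL so that you can spot them in a list of crawled URLs. The parameter names in IGNORED_PARAMS, the choice of https and www as the preferred form, and the function names are assumptions made for this example, not rules from Google, so adapt them to your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: parameters that never change the page content on this
# hypothetical site (session IDs, tracking). Adjust to your own URLs.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium"}


def normalize(url: str) -> str:
    """Map crawlable variants of a URL onto one preferred form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if not host.startswith("www."):
        host = "www." + host  # assumption: the www host is the preferred one
    # parse_qsl drops bare cache busters such as "?2345345" by default,
    # because they have no "=" and therefore no value.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in IGNORED_PARAMS
    ]
    query.sort()  # the same parameters in a different order are one page
    return urlunsplit(("https", host, parts.path or "/", urlencode(query), ""))


def group_variants(urls):
    """Return {normalized URL: [variants]} for pages with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}
```

If you pass the four example.com URLs from the “Missing redirects” item to group_variants, they end up in a single group, which shows that one page is reachable under four addresses. Facet parameters such as color are kept on purpose, because whether they change the content depends on your shop.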

If one or more of the factors described above apply to your website, you should definitely perform crawl budget optimization. Otherwise, there’s the danger that Googlebot won’t index pages on your website that offer high-quality content because it’s too busy crawling irrelevant pages.
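Redirect chains are one of these factors that you can check yourself with little effort. The sketch below assumes that you have already exported your redirects as a simple mapping from source URL to target URL (the redirects dictionary, the function names and the limit of 10 hops are hypothetical choices for this example). It follows each link until it reaches a URL that no longer redirects.

```python
def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow start through the redirect map and return every URL visited."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        seen_before = target in chain
        chain.append(target)
        if seen_before:  # redirect loop: stop instead of following it forever
            break
    return chain


def links_to_fix(links, redirects):
    """Return {linked URL: final URL} for every link that hits a redirect."""
    fixes = {}
    for url in links:
        chain = redirect_chain(url, redirects)
        if len(chain) > 1:
            fixes[url] = chain[-1]
    return fixes
```

A chain with more than two entries means that Googlebot needs several requests to reach the content. Pointing both the link and the first redirect directly at the last URL in the chain removes the extra requests.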

How to increase and efficiently use crawl budget

Crawl budget optimization usually has two objectives: first, to increase the overall crawl budget available and, second, to use the existing crawl budget in a more efficient way. Below we show you possible strategies for both approaches.

How to increase your crawl budget

- Improve server response time and page speed: If your server responds quickly to page requests, Googlebot can use multiple connections simultaneously to crawl your site. This increases the crawl rate and thus the overall available crawl budget. Optimizing the page speed of your website is worthwhile anyway, as page speed is also a ranking factor. In our wiki, you will find a detailed guide on how to improve page speed. The most important aspect in the context of crawl budget optimization is to minimize the number of HTTP requests that have to be sent to a server in order to load a page. This also includes requests for resource files such as CSS or JavaScript.

- Reduce server errors: Make sure that your technical infrastructure is free of errors so that Google can retrieve as many URLs as possible from your web server. Also, ensure that all pages you want to have crawled return status code 200 (OK) or, where content has moved, 301 (permanent redirect).

- Increase crawl demand: To make Google visit your website as often as possible, you need to make your site interesting. This is possible, for example, by building backlinks. Backlinks signal to Google that your site is recommended by other sites and therefore most likely delivers good content. Since Google always wants to keep such pages up to date in its index, Googlebot visits pages with many backlinks more frequently.

  Another way to increase crawl demand is to increase user engagement with your content as well as the time visitors spend on your site. Likewise, a high level of social sharing shows that your website offers content that is worth sharing. In addition, to make Google visit your site, you should publish fresh content on a regular basis.

How to use your crawl budget more efficiently

Although you can increase your crawl budget to some extent, it is equally important to use your existing crawl budget in an efficient way. This is possible through the following strategies:

- Exclude irrelevant content from crawling: As a first step, you should exclude all pages from crawling that are irrelevant for search engines, e.g. by using a robots.txt file. These could be log-in pages, contact forms, URL parameters, and the like. In our wiki article, you will find a detailed description of the functionality and possible instructions in a robots.txt file.

  But be aware: If pages are excluded from crawling via robots.txt, Google can no longer read important meta information such as canonical tags or noindex directives on these pages.

- Using nofollow: Although many website owners don’t want to use nofollow for internal links, this is another way to keep Googlebot away from irrelevant pages. For example, nofollow can be used in the above calendar example for the “next month” link to avoid infinite spaces. However, you also lose important link equity with this method. Therefore, you have to evaluate for each individual case whether you want to use robots.txt or nofollow.

- Avoid URL parameters: URL parameters should be avoided wherever possible. If this is not possible, you should exclude them from crawling in your robots.txt, provided that they are not needed to retrieve relevant content.

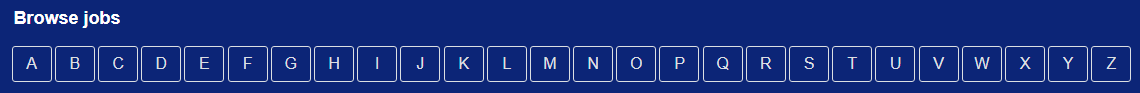

-

Flat site architecture: With a flat site architecture you can ensure that all subpages of your website are just a few clicks away from your homepage. This is not only great for users but also for search engine bots. Otherwise, there’s the danger that crawlers don’t visit pages of your website that are located too deep in your site hierarchy. The easiest way to implement a flat site architecture is to use an HTML sitemap that lists all important subpages of your website. If all the links don’t fit on one HTML page, you can distribute your sitemap into several hierarchies and sort them alphabetically, for example:

Screenshot of stepstone.de

- Optimize internal linking: Search engine bots follow internal links to navigate through your website. Therefore, you can use your internal linking to direct crawlers to specific pages. For example, you can link to pages that should be crawled more often from pages that already have many backlinks and that are visited more frequently by Googlebot. You should also set many internal links to important subpages to signal their relevance to Google. In addition, such pages should not be too far away from your homepage. You can find more information on how to optimize your website’s internal links here.

- Provide an XML Sitemap: Use an XML Sitemap to help Google get an overview of your website. This speeds up crawling and reduces the likelihood that high-quality and important content will go undetected.

- Don’t set links on redirects: Both internal and external links that lead to your website should never point to a redirecting URL. Unlike web browsers, Googlebot does not follow redirects immediately, but step by step. So if a server answers with a redirect, the bot stores the target URL and visits it later. This increases the risk of important content getting lost in the mass of URLs to be crawled.

- Avoid redirect chains: As explained above, redirect chains increase the number of URLs that have to be requested from the server and thus waste crawl budget. For this reason, you should always redirect directly to the destination URL.

- Check your content regularly: If your website has a lot of subpages, you should regularly review your content and merge or delete it where appropriate. This will help to avoid duplicate content and to ensure that each subpage provides relevant and unique content.
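Before you rely on robots.txt rules to keep crawlers away from irrelevant pages, it can help to test them against a few sample URLs. Here is a minimal sketch using Python’s built-in urllib.robotparser module. The robots.txt content, the paths /login and /calendar/ and the helper is_crawlable are made up for this example. As far as we know, this parser only does simple prefix matching and does not evaluate the * and $ wildcards that Google supports, so results can differ for more complex rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com; the paths are assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /calendar/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the text directly, no download


def is_crawlable(url: str, agent: str = "Googlebot") -> bool:
    """Return True if the rules above allow the agent to fetch the URL."""
    return parser.can_fetch(agent, url)
```

With these rules, a product page such as https://www.example.com/products/shoes stays crawlable, while the log-in page and every URL below /calendar/ are blocked. Keep in mind that blocked pages can no longer pass on canonical tags or noindex directives, as mentioned above.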

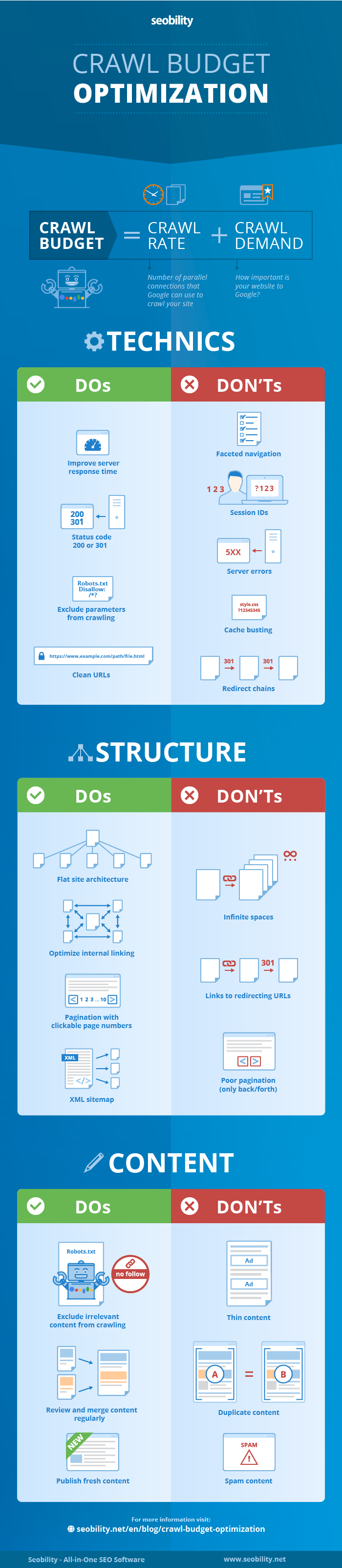

Infographic “Crawl Budget Optimization”

As you can see, there are a lot of points you have to consider for crawl budget optimization. To help you get an overview of the topic, we summarized the most important dos and don’ts in the following infographic:

Monitoring tips

Like many other tasks in SEO, crawl budget optimization can’t be finished in one day. It requires regular monitoring and fine-tuning to ensure that Google only visits important pages on your website. Hence, we would like to give you some tips and tools for monitoring your success.

-

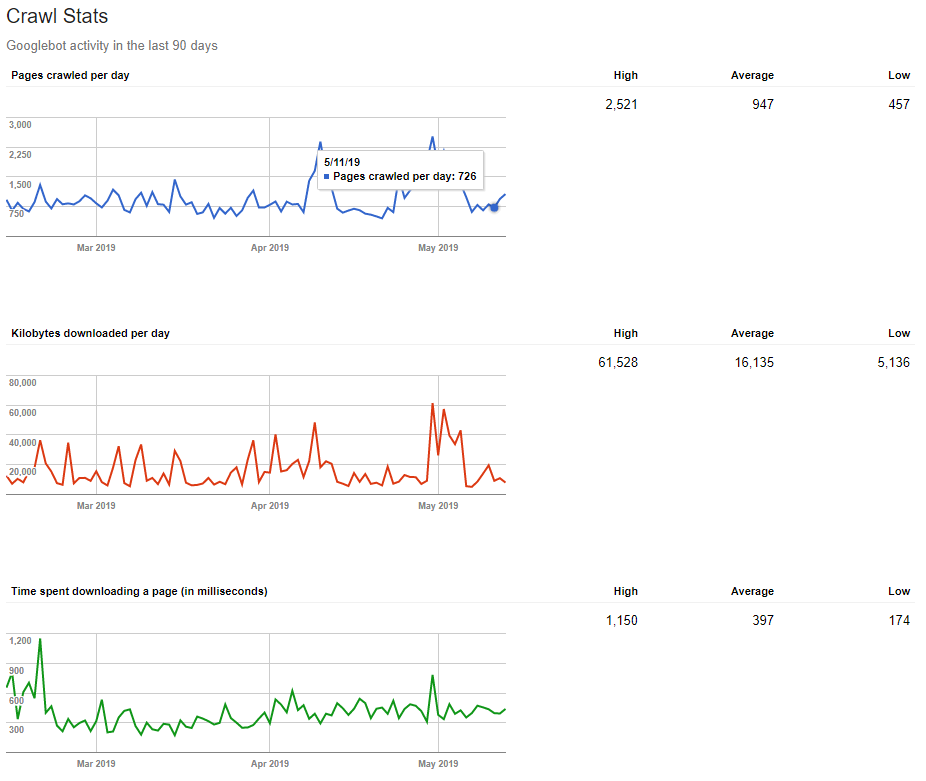

Crawl stats in Google Search Console: In the “crawl stats” section in (old) Google Search Console, you can get the number of pages crawled per day on your website for the last 90 days:

Screenshot of google.com/webmasters/tools/crawl-stats

You can use these stats to check if your optimization measures actually lead to an increase in crawl budget.

- Log file analysis: Log files are used by web servers to track every access to your website and store information such as IP address, user agent, etc. By analyzing your log files, you can find out which pages Googlebot crawls more frequently and check if these are the correct (i.e. relevant) ones. In addition, you can find out if there are pages that are very important from your point of view but are not visited by Google at all.

- Index coverage report: This report in Search Console shows you errors that occurred while indexing your website (for example, server errors) and that are affecting crawling.

  Screenshot of search.google.com/search-console/index
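For a first log file analysis, you do not need special software. The following sketch assumes that your server writes the widespread “combined” log format used by Apache and nginx, and counts how often a client identifying itself as Googlebot requested each path. The regular expression, the function names and the idea of passing in your own list of important paths are assumptions for illustration.

```python
import re
from collections import Counter

# Assumption: access logs in the "combined" format, e.g.
# 66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /shop HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; ...)"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


def never_crawled(important_paths, hits):
    """Return the important paths that Googlebot did not request at all."""
    return [path for path in important_paths if hits[path] == 0]
```

You could feed googlebot_hits with the lines of an opened log file and then pass the result to never_crawled together with the paths of your most important pages. Note that the user agent string can be faked, so for a reliable analysis you should additionally verify the requesting IP addresses, for example via reverse DNS lookup.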

Conclusion

At first glance, the optimization measures described above may seem a bit daunting, but basically, it’s all about one thing: providing technically flawless functionality and a good structure on your website. This will ensure that search engines can quickly find important content on your website, thus creating the foundation for efficient crawling. Once you’ve done that, Google can focus on your high-quality content without having to bother with bugs or irrelevant pages.

Pro tip: On-page crawlers like Seobility can help reduce the workload. They automatically crawl your website and help you find errors and optimization potential.
