On October 24th, 2019, Google Search took a huge leap forward when Google introduced a new update called BERT. According to the official announcement, this is the biggest change to Google Search not only in 2019 but in the past five years, affecting one in 10 English-language search queries in the U.S. On December 9, Google announced that the update was rolling out to over 70 languages worldwide. Given its big impact, it’s important to understand how BERT works and how it changes Google Search. In this article, you will learn what BERT means for your SEO and which other opportunities it might offer for your website.

What is BERT?

BERT is not only the name of this Google update but also the name of Google’s new open-sourced technique for natural language processing (NLP), which helps computers understand human language. What makes BERT special is that it can process the words both before and after a specific term in a sentence at the same time (this is called “bidirectionality”), which enables a much more accurate interpretation of that term’s context and meaning. Google uses this technique in its search algorithm to better understand what users are actually searching for.

At the end of this article, we provide a more detailed explanation of the technical foundations that will help you understand how BERT and Google Search work. If you want to learn more about the technical side before you read on, skip ahead to the section “The technical foundations of BERT”.

Initially, BERT was only live for English search queries in the U.S., but on December 9, Google started rolling it out to more than 70 languages worldwide. After Google’s official announcement, Bing stated that it had been using BERT since April and had since implemented it globally.

How does BERT improve Google search?

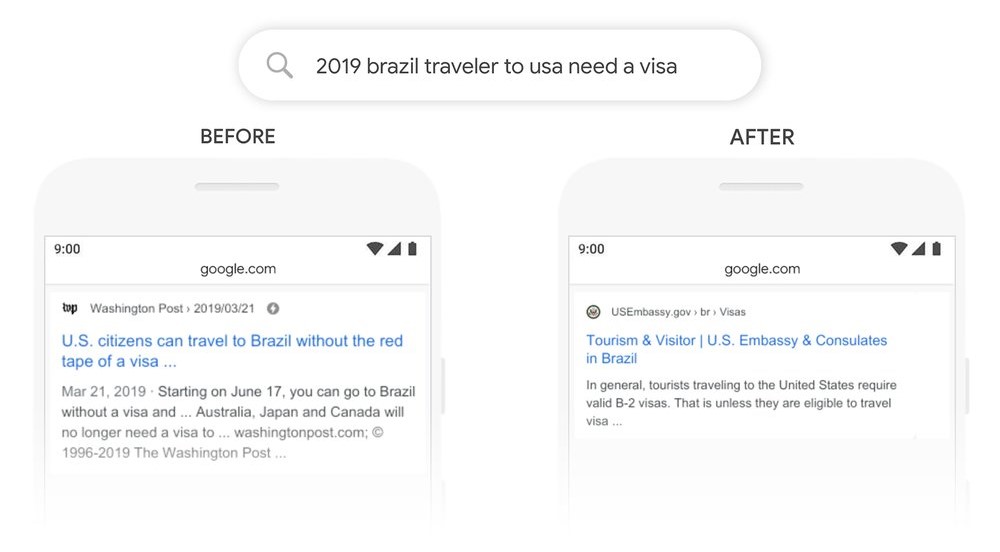

On its blog, Google states that BERT enables its search engine to understand longer, more conversational search queries. One example it gives is the query “2019 brazil traveler to usa need a visa”. Before the BERT update, this query returned results about U.S. citizens traveling to Brazil. Thanks to BERT, the word “to” is now recognized as important, and the results have changed, as you can see here:

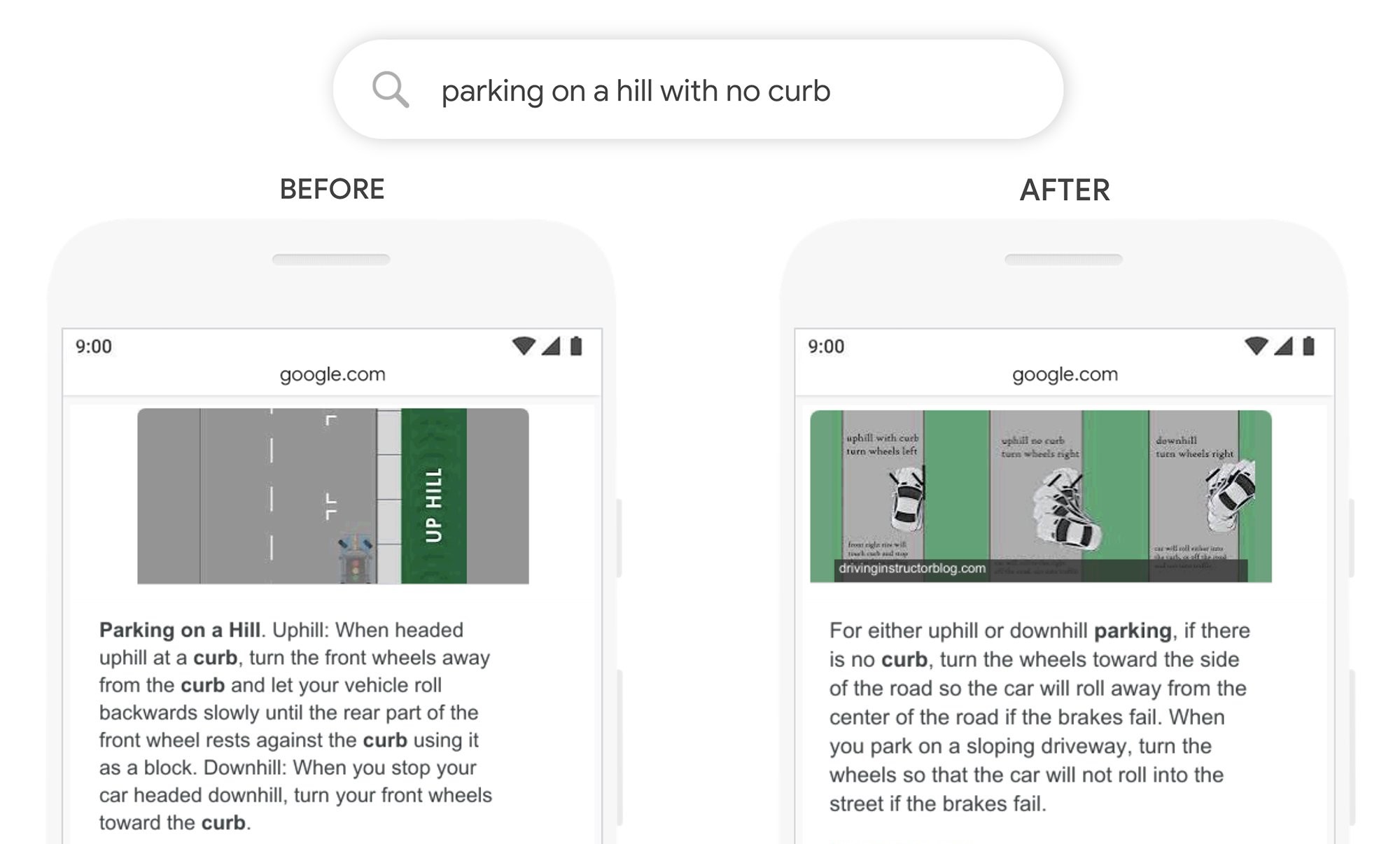

According to Google, BERT also improves the quality of featured snippets. One example is a query about parking on a hill with no curb.

Before the update, queries like this were hard for Google’s algorithm to understand, and it returned results on how to park on a hill with a curb.

BERT changes that because it understands how crucial the word “no” is in this query. As a result, the new featured snippet answers the user’s question much more accurately.

What BERT doesn’t do is judge websites or assign values to pages, as Danny Sullivan, Google’s public search liaison, stated on Twitter:

BERT doesn't assign values to pages. It's just a way for us to better understand language.

— Danny Sullivan (@dannysullivan) October 30, 2019

This means that BERT doesn’t evaluate or score websites. It just improves the algorithm’s understanding of natural language.

BERT and RankBrain

Google introduced RankBrain in 2015 for the same reason it introduced BERT in 2019: to understand what users are really searching for and deliver the most relevant search results. RankBrain analyzes users’ search queries as well as the content of web pages in order to understand the meaning behind the terms that users search for. This was a breakthrough for semantic search, which is about understanding the searcher’s intent, the context of a query, and the relationship between words.

The BERT update doesn’t render RankBrain obsolete; it simply supplements it. Google’s algorithm can decide which technique is best suited to interpret a specific search query and, based on this decision, apply one of them or even combine them.

BERT’s (unnoticed) impact on SEO

Google calls BERT a huge update and stated that it affects one in 10 search queries. However, the SEO community didn’t see any significant spikes or fluctuations in rankings after the release of the BERT update. The main reason is likely that BERT mostly affects conversational search queries and long-tail keywords, where ranking changes often go unnoticed.

What does BERT mean for your SEO?

While this update is a big and important one that improves search results for many Google users, not much will change for SEO, as Danny Sullivan confirms:

There's nothing to optimize for with BERT, nor anything for anyone to be rethinking. The fundamentals of us seeking to reward great content remain unchanged.

— Danny Sullivan (@dannysullivan) October 28, 2019

So, as you can see, you can’t really optimize your website for BERT. Instead, great content remains the most important SEO success factor you should focus on, and it matters even more now than before. BERT is not about penalizing anyone, but about understanding the searcher’s intent and what users actually want. Focus on creating high-quality content for your target audience instead of writing SEO content for Google.

However, this doesn’t mean that BERT is completely irrelevant for website owners. In fact, the new NLP technology brings great opportunities such as:

- Training your own question answering system: Since BERT’s technology is open-sourced, you can use it to train your own question answering system, e.g. for chatbots on your website or similar applications.

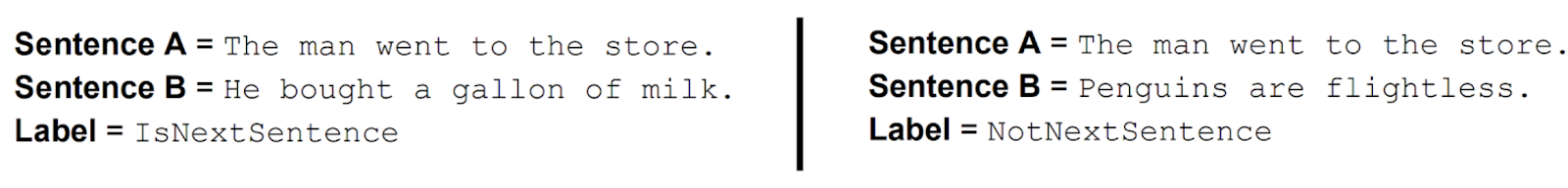

- Improving content quality: BERT can predict whether sentence A is likely to be followed by sentence B (more on that below). This can be used to check whether the content on your website follows a logical and coherent line of thought.

- Higher quality of traffic: BERT enables Google to deliver search results that really match users’ needs. This could result in search traffic to your website that is of higher quality than before.

The technical foundations of BERT

BERT and Natural Language Processing

BERT is Google’s neural network-based technique for natural language processing (NLP) pre-training, which was open-sourced in November 2018. NLP is a field of artificial intelligence (AI) that helps computers understand human language and enables communication between machines and humans. Applications of NLP include translation services such as Google Translate and tools such as Grammarly that check written texts for grammatical errors. Other examples are personal assistants such as Amazon’s Alexa or Apple’s Siri. They use Natural Language Understanding (a subfield of NLP) to understand what you actually want to know or do when you talk to them.

NLP has traditionally relied on many separate, task-specific models for tasks such as classification or sentiment analysis. BERT is a breakthrough for NLP because a single pre-trained model can be fine-tuned for many of these tasks: in the original paper, it achieved state-of-the-art results on eleven NLP tasks.

BERT in detail

BERT stands for “Bidirectional Encoder Representations from Transformers”. Sounds complicated but it’s actually pretty simple if you break it down from right to left:

BERT uses the Transformer, a neural network architecture built around an attention mechanism that learns the contextual relations between words in a text. The original Transformer consists of an encoder, which reads the text input, and a decoder, which produces an output for the respective task (for example, a translation). BERT only uses the Transformer’s encoder, because its goal is to build a representation of language (a language model) rather than to generate text. That’s where the term “encoder representations” in the update’s name comes from.
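
To make the attention mechanism more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation inside the Transformer encoder. It assumes tiny, made-up 3-dimensional word vectors and leaves out the learned query, key and value projections, the multiple attention heads and everything else a real BERT layer contains. The idea it shows: each word’s new representation is a weighted average of all words in the sentence, with weights based on how similar the words are.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention, simplified so that queries, keys and
    values are the input vectors themselves (no learned projection matrices)."""
    d = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Similarity of this word to every word in the sequence, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        w = softmax(scores)
        weights.append(w)
        # New representation: attention-weighted average of all word vectors.
        outputs.append([sum(w[j] * vectors[j][c] for j in range(len(vectors))) for c in range(d)])
    return outputs, weights

# Hypothetical 3-dimensional embeddings for a tiny sentence.
embeddings = {
    "the": [0.1, 0.1, 0.1],
    "bank": [1.0, 0.5, 0.0],
    "river": [0.9, 0.0, 0.2],
}
sentence = ["the", "bank", "river"]
outputs, weights = self_attention([embeddings[w] for w in sentence])
for word, row in zip(sentence, weights):
    print(word, [round(x, 2) for x in row])
```

In this toy example, “bank” attends more strongly to “river” than to “the”, because their vectors are more similar. In a trained model, those vectors and the projections around them are learned from data.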

Bidirectional simply means that the text input isn’t read unidirectionally (left-to-right or right-to-left); instead, the entire sequence of words is read at once, so that the words to the left and right of a target word can be used simultaneously to create context. Google explains the concept of bidirectionality on its blog:

“For example, the word ‘bank’ would have the same context-free representation in ‘bank account’ and ‘bank of the river’. Contextual models instead generate a representation of each word that is based on the other words in the sentence. For example, in the sentence ‘I accessed the bank account’, a unidirectional contextual model would represent ‘bank’ based on ‘I accessed the’ but not ‘account’. However, BERT represents ‘bank’ using both its previous and next context — ‘I accessed the … account’ — starting from the very bottom of a deep neural network, making it deeply bidirectional.”

This bidirectional approach is what makes BERT a groundbreaking innovation for Natural Language Processing. Looking at what comes both before and after a specific word helps to determine the exact meaning of that word in a sentence which has always been easy for humans but very difficult for machines without reasoning skills.
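
In a Transformer, this difference comes down to which positions each word is allowed to attend to. The sketch below is a simplified illustration with hypothetical helper names: a left-to-right (unidirectional) mask lets “bank” see only the words before it, while a bidirectional setting like BERT’s lets it see the whole sentence, just as in Google’s “I accessed the bank account” example.

```python
def attention_mask(n_tokens, bidirectional):
    """Return an n x n matrix where mask[i][j] is True if token i may attend to token j."""
    # Unidirectional (left-to-right): token i only sees positions 0..i.
    # Bidirectional: every token sees every position.
    return [[bidirectional or j <= i for j in range(n_tokens)] for i in range(n_tokens)]

def visible_context(tokens, target, bidirectional):
    """List the other words the target word can use as context."""
    i = tokens.index(target)
    mask = attention_mask(len(tokens), bidirectional)
    return [tok for j, tok in enumerate(tokens) if mask[i][j] and j != i]

tokens = ["I", "accessed", "the", "bank", "account"]
print(visible_context(tokens, "bank", bidirectional=False))  # ['I', 'accessed', 'the']
print(visible_context(tokens, "bank", bidirectional=True))   # ['I', 'accessed', 'the', 'account']
```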

Training BERT

BERT’s bidirectional approach poses quite a challenge for training the model. Classic directional approaches usually predict the next word in a sentence by looking at the text sequence before this word, e.g. “The child came home from _____”. Because BERT is designed to consider the whole context, it uses a training strategy called masking instead.
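
To see why context on both sides of a gap helps, here is a toy, count-based sketch. It is not how BERT works internally (BERT is a neural network); it simply counts words in a hypothetical five-sentence corpus and fills the gap in “the child came home from ___ yesterday” once using only the word to its left and once using the words on both sides.

```python
from collections import Counter

# Hypothetical mini-corpus; real language models learn from billions of words.
CORPUS = [
    "the child came home from school today",
    "the child came home from school late",
    "the child came home from school early",
    "the child came home from camp yesterday",
    "we came home from camp yesterday",
]

def predict_left_only(left):
    """Predict the gap using only the word before it (left-to-right view)."""
    counts = Counter()
    for sentence in CORPUS:
        words = sentence.split()
        for i in range(len(words) - 1):
            if words[i] == left:
                counts[words[i + 1]] += 1
    return counts.most_common(1)[0][0]

def predict_both_sides(left, right):
    """Predict the gap using the words on both sides of it."""
    counts = Counter()
    for sentence in CORPUS:
        words = sentence.split()
        for i in range(1, len(words) - 1):
            if words[i - 1] == left and words[i + 1] == right:
                counts[words[i]] += 1
    return counts.most_common(1)[0][0]

# "the child came home from ___ yesterday"
print(predict_left_only("from"))                # school
print(predict_both_sides("from", "yesterday"))  # camp
```

Looking only to the left, “school” is the most frequent continuation; once the word after the gap is taken into account, “camp” fits better.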

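During pre-training, BERT is fed large amounts of complete text. In each input sequence, about 15 percent of the words (more precisely, tokens) are selected, and most of them are replaced by a [MASK] token. BERT looks at the words to the left and right of each masked token and tries to predict the original word. This is repeated across a huge number of examples, and each wrong prediction is used to adjust the model’s weights, so that BERT gradually learns how words relate to their context.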
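
The masking step can be sketched in a few lines of Python. This is a simplified sketch that assumes whitespace-separated words instead of the WordPiece sub-word tokens BERT actually uses; the function name `mask_tokens` and the replacement vocabulary are hypothetical. It follows the recipe described in the BERT paper: about 15 percent of positions are selected, and of those, 80 percent are replaced with [MASK], 10 percent with a random word, and 10 percent are left unchanged.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Prepare one masked language modeling example (hypothetical helper).

    Returns the corrupted token list and a dict {position: original token}
    holding the words the model has to predict.
    """
    rng = rng or random.Random()
    num_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = rng.sample(range(len(tokens)), num_to_mask)
    masked = list(tokens)
    targets = {}
    for i in positions:
        targets[i] = tokens[i]  # the model must predict this original word
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)
        # Otherwise keep the original token, so the model can't rely on always seeing [MASK].
    return masked, targets

sentence = "the child came home from school late today".split()
vocab = ["dog", "river", "blue", "ran"]  # hypothetical replacement vocabulary
masked, targets = mask_tokens(sentence, vocab, rng=random.Random(0))
print(masked)
print(targets)
```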

Another important training strategy is Next Sentence Prediction (NSP), in which BERT is fed pairs of sentences and learns to predict whether the second sentence follows the first one in the original document. This helps the model understand the relationship between sentences, not just the meaning of individual words and phrases. For example, the original BERT paper pairs “The man went to the store” with “He bought a gallon of milk” (labeled IsNext) and with “Penguins are flightless birds” (labeled NotNext).
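
The following sketch shows how NSP training pairs could be assembled, assuming each document is already split into sentences. The function `make_nsp_pairs` is hypothetical; following the approach described in the BERT paper, about half of the pairs use the real next sentence (IsNext) and the other half a random sentence from a different document (NotNext).

```python
import random

def make_nsp_pairs(documents, rng=None):
    """Build Next Sentence Prediction examples from documents (lists of sentences).

    Each sentence A that has a successor is paired, with 50% probability, with its
    real next sentence (IsNext), otherwise with a random sentence from another
    document (NotNext).
    """
    rng = rng or random.Random()
    pairs = []
    for d, doc in enumerate(documents):
        # All sentences from the other documents are candidates for NotNext.
        others = [s for j, other in enumerate(documents) if j != d for s in other]
        for i in range(len(doc) - 1):
            if rng.random() < 0.5 or not others:
                pairs.append((doc[i], doc[i + 1], "IsNext"))
            else:
                pairs.append((doc[i], rng.choice(others), "NotNext"))
    return pairs

documents = [
    ["The man went to the store.", "He bought a gallon of milk.", "Then he walked home."],
    ["Penguins are flightless birds.", "They are excellent swimmers."],
]
for a, b, label in make_nsp_pairs(documents, rng=random.Random(1)):
    print(label, "|", a, "->", b)
```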

BERT’s weaknesses

While BERT is really smart and exciting, it still has weaknesses. Allyson Ettinger from the University of Chicago published a research paper in which she describes some of the problems BERT still has. One thing she mentions is that “it shows clear failures with the meaning of negation.” Her example is the sentence “A robin is a ___”. Here, BERT correctly predicted the word “bird”. When given the input “A robin is not a ___”, it still predicted “bird”.

(If you started this article with the technical foundations, you can now go back to the section on how BERT improves Google Search.)

Conclusion

BERT is a groundbreaking technology, not only for NLP in general but also for Google Search. However, it’s important to understand that BERT doesn’t change how websites are evaluated; it simply improves Google’s understanding of natural language. For Google, this is an important step toward its ultimate goal: understanding exactly what users want in each and every situation.

If you want to dive deeper into BERT, make sure to check out the related articles below.

And for more information on how to optimize your website for Google Search, we recommend reading the other articles on this SEO blog as well as our SEO wiki.

Update (December 4, 2019)

In line with its effort to understand users’ exact search intent, Google released the November 2019 Local Search Update shortly after BERT. With this update, Google started using neural matching for local search results so that it can recognize users’ local search intent even when they don’t use the exact words of business names or descriptions.

The use of neural matching means that Google can do a better job going beyond the exact words in business name or description to understand conceptually how it might be related to the words searchers use and their intents…

— Google SearchLiaison (@searchliaison) December 2, 2019

Considering the rapid pace at which Google’s algorithm evolves, we are excited to see what the future holds for Google Search!

Related articles

https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270

https://www.blog.google/products/search/search-language-understanding-bert/

https://ai.googleblog.com/2018/11/open-sourcing-bert-state-of-art-pre.html

https://www.stateofdigital.com/how-bert-can-improve-the-quality-of-your-traffic/