What is a Large Language Model?

A Large Language Model (LLM) is an advanced computer program that has been trained to understand and create human-like text. It learns from huge amounts of text data (like books, websites, and articles) and can do many tasks involving language such as answering questions, writing text, or summarizing information.

Most LLMs today are built on a type of neural network called the transformer, and they often have billions (or even trillions) of adjustable numerical values, called parameters, that are tuned during training and determine how the model handles language.

For example, the tool ChatGPT is based on an LLM.

How do LLMs work?

Transformers: The technology behind LLMs

LLMs use the transformer architecture, which was introduced in 2017.[1] Its core mechanism, called attention, helps the model understand the meaning of each word in a sentence by weighing how strongly it relates to every other word. Because these comparisons can be computed in parallel rather than one word at a time, the model can also be trained on large amounts of text efficiently.
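To make the idea of "looking at how words relate to each other" more concrete, here is a minimal sketch of attention in plain Python. It is a simplification under stated assumptions: the word vectors are made-up numbers, and the learned query, key, and value matrices that real transformers use are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product: larger when two vectors point in similar directions."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: real transformers first multiply each vector by learned
    query, key, and value matrices; that step is omitted in this sketch.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # How strongly does this word relate to every word in the sentence?
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # New representation: a weighted average of all word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
    return outputs

# Toy 2-dimensional "embeddings" for a three-word sentence (made-up numbers).
sentence = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextual = self_attention(sentence)
```

Each output vector is a blend of the whole sentence, with similar words contributing more. Every word's scores are computed independently of the others, which is why this step can run in parallel.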

Scaling laws: Bigger models work better

Researchers have found that LLMs usually perform better when they are larger and trained with more data and computing power. This is known as a scaling law: the model's prediction error falls smoothly and predictably (roughly as a power law) as model size, training data, and compute increase.[2]
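A power law of this kind can be sketched in a few lines of Python. The function name and the constants below are assumptions chosen for illustration (they are in the general range discussed in [2] for model size), so the printed numbers should not be read as measurements of any real model.

```python
def predicted_loss(num_parameters, n_c=8.8e13, alpha=0.076):
    """Power-law form L(N) = (N_c / N) ** alpha for model size N.

    n_c and alpha are illustrative constants (assumptions for this sketch).
    """
    return (n_c / num_parameters) ** alpha

# Each 10x increase in size lowers the predicted loss by the same factor.
for size in (1e8, 1e9, 1e10, 1e11):
    print(f"{size:.0e} parameters -> predicted loss {predicted_loss(size):.2f}")
```

The useful property is the shape rather than the exact values: every tenfold increase in size multiplies the predicted loss by the same factor, so gains are steady but require ever larger models.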

How are LLMs trained?

LLMs go through several steps during training:

- Pre-training: The model learns patterns in language by reading large amounts of text from the internet, books, code, and more. This step is self-supervised – no manual labeling – though the data is still curated and filtered by humans.

- Fine-tuning: The model is then trained on specific topics or tasks (e.g., writing code or understanding biology) using examples and guidance from humans.

- Alignment: Final adjustments, most commonly RLHF (Reinforcement Learning from Human Feedback), are made to ensure the model’s responses are safe, helpful, and aligned with human values. This step can also use rule-based techniques.
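The pre-training step in the list above is called self-supervised because the text provides its own answers: the "label" for each word is simply the word that follows it. The sketch below shows that idea with a tiny word-pair counter. It is a stand-in under loud assumptions: real LLMs train a neural network on tokens rather than counting word pairs, and the function names and corpus are hypothetical. Fine-tuning and alignment are not shown.

```python
from collections import Counter, defaultdict

def pretrain_bigram(text):
    """Count which word follows which; the text supplies its own labels."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent continuation seen in training, or None."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Made-up training text; no human labeled anything in it.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = pretrain_bigram(corpus)
print(predict_next(model, "the"))  # prints: cat
```

No one had to annotate the corpus for this to work, which is what lets pre-training scale to very large collections of text.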

Using LLMs more efficiently

Running an LLM can require a lot of computing power, and for very popular models the energy used during inference (answering user requests) can eventually exceed the energy spent on training.[3] To make this more efficient, developers use techniques like:

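- Quantization: Storing the model's parameters at lower numerical precision (for example, 8-bit integers instead of 16- or 32-bit floating-point numbers), which makes the model smaller and cheaper to run with minimal accuracy loss if done carefully.

- Retrieval-Augmented Generation (RAG): Retrieving relevant passages from trusted documents at question time and adding them to the prompt, so the model can base its answer on them.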
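Both techniques above can be sketched in plain Python. This is a toy illustration, not how any particular library does it: the quantizer uses a simple symmetric 8-bit scheme, the retriever ranks documents by shared words (real systems typically use embedding-based search), and all function names, weights, and documents are assumptions.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    asked = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda d: len(asked & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Prepend the retrieved text so the model can ground its answer in it."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Quantization: four made-up weights stored as small integers plus one scale.
weights = [0.42, -1.27, 0.05, 0.88]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# RAG: the most relevant made-up document is added to the prompt.
docs = [
    "The Eiffel Tower is in Paris.",
    "Quantization stores weights in fewer bits.",
]
prompt = build_prompt("Where is the Eiffel Tower?", docs)
```

The quantized weights need one byte each instead of four, at the cost of a rounding error of at most half the scale. The prompt produced by `build_prompt` would then be sent to the model in place of the bare question.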

What are important LLM milestones?

What can LLMs do?

LLMs can help in many areas:

Modern LLMs can also work with images, audio, and tools (like calling APIs or searching the web). Note: these multimodal abilities are currently available only in certain models (e.g., GPT-4o, Gemini 2.x, and Llama 4).
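Tool use usually works by having the model write a structured request that ordinary code then carries out. The sketch below assumes a simple JSON format and a hypothetical `get_weather` tool; it does not follow any specific vendor's API, and the model output is a hard-coded stand-in.

```python
import json

def get_weather(city):
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"

# Registry of functions the model is allowed to request (assumed for this sketch).
TOOLS = {"get_weather": get_weather}

def run_tool_call(model_output):
    """Parse a JSON tool request and execute the matching function."""
    request = json.loads(model_output)
    tool = TOOLS.get(request["tool"])
    if tool is None:
        return f"Unknown tool: {request['tool']}"
    return tool(**request["arguments"])

# Stand-in for text a model might emit when it decides to use a tool.
model_output = '{"tool": "get_weather", "arguments": {"city": "Lisbon"}}'
result = run_tool_call(model_output)
print(result)  # prints: Sunny in Lisbon
```

In a full system the result would be passed back to the model, which then writes the final answer for the user.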

What are the challenges and risks of LLMs?

Even though LLMs are powerful, they’re not perfect:

- Hallucinations: They sometimes make up facts or give wrong information.[4]

- Bias: Since they learn from internet data, they can reflect unfair opinions or stereotypes.[5]

- Cost and Energy: Training and using these models takes a lot of electricity and money.

- Security: LLMs can be tricked into revealing private information or used in harmful ways.[6]

- Intellectual Property: Training on copyrighted material, or generating output that closely resembles it, raises legal questions.

How LLMs are made safer

To make LLMs safer and more helpful, developers use several methods:

Rules and regulations

Governments and companies are starting to create rules for LLMs:

- The EU AI Act (2024) takes a risk-based approach: it sets transparency obligations for AI providers and requires systems classed as high-risk to be assessed for risks.[7]

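- In the US, AI policy has so far been set mainly through executive orders rather than legislation. The October 2023 Executive Order on Safe, Secure, and Trustworthy AI was rescinded in January 2025, and a series of new AI executive orders followed in July 2025 (federal legislation is still evolving).[8]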

- Globally, discussions are happening about how to monitor and label AI-generated content.

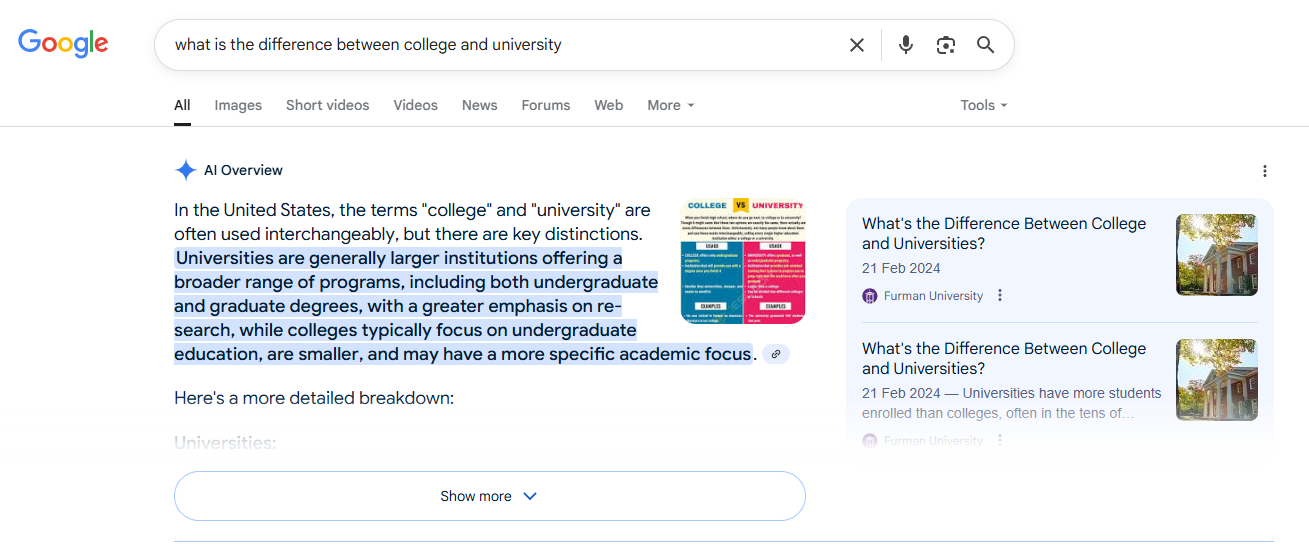

LLMs and SEO (Search Engine Optimization)

Search engines like Google now show AI-generated answers at the top of search results. This changes how people find information online.

Bing, Perplexity, and other engines provide similar generative snippets.

This means that businesses need to update their SEO strategies to include LLM SEO (also called Generative Engine Optimization), which focuses on:

- Making content clear and easy to understand.

- Using structured data (like FAQs or product info).

- Getting mentioned in trusted sources (to increase visibility in AI-generated answers).
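As an example of the structured data mentioned above, FAQ content can be described with schema.org `FAQPage` markup in JSON-LD. The sketch below builds that markup in Python; the helper name `faq_jsonld` and the sample question are assumptions, and whether a given search or answer engine actually uses the markup is up to that engine.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

# Made-up FAQ entry for illustration.
markup = faq_jsonld([
    ("What is an LLM?",
     "A model trained on large amounts of text to understand and generate language."),
])

# JSON-LD is normally embedded in the page inside a script tag.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(snippet)
```

The resulting snippet is placed in the page's HTML, giving machines an unambiguous question-and-answer pair alongside the human-readable text.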

Learn more: Answer Engine Optimization

What’s next for LLMs?

Researchers are working on new improvements:

- Smarter and more efficient models that use less energy

- Longer memory (larger context windows), so the model can work with entire books or big reports

- AI agents that can plan tasks and take actions

- Sustainability innovations in chip design, liquid cooling, and algorithmic efficiency to curb energy use.[3]

- Better tracking tools to prove where information comes from and keep AI outputs safe

References

[1] https://arxiv.org/abs/1706.03762

[2] https://arxiv.org/abs/2001.08361

[3] https://www.reuters.com/commentary/breakingviews/ai-boom-is-infrastructure-masquerading-software-2025-07-23/

[4] https://arxiv.org/abs/2401.11817

[5] https://arxiv.org/html/2411.10915v1

[6] https://www.theverge.com/ai-artificial-intelligence/711975/a-new-study-just-upended-ai-safety

[7] https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

[8] https://nypost.com/2025/07/23/us-news/trump-targets-woke-ai-in-series-of-executive-orders-on-artificial-intelligence/

Related links

- https://developers.google.com/machine-learning/resources/intro-llms

- https://www.techtarget.com/whatis/feature/12-of-the-best-large-language-models