David McSweeney is our Chief Editor here at Seobility. He wrote and edited most of the recent guides and articles on our blog. The following article is his assessment of the state of Google Core Updates.

Google dropped an early Christmas present on the SEO community earlier this month, with their first (official) Core algorithm update in over 7 months.

And it’s safe to say, it was one of the biggest SERP shake-ups seen in recent times.

So what was this update all about? And has it actually improved the search results?

Well, before we delve into observations, conjecture, and perhaps a sprinkling of conspiracy, let’s start with the facts.


Google’s December Core Update: A Quick Timeline

Most SEOs (myself included) weren’t expecting another Google Core update this year.

We had been anticipating one hitting around September/October. But when that didn’t arrive, we figured Google wouldn’t roll anything out before January. It was a reasonable assumption, as normally they don’t rock the boat too much in the run up to the holidays.

We were dead wrong.

Because on the third of December, we got a couple of hours’ notice via a tweet, then it was time to ride the Core update rollercoaster.

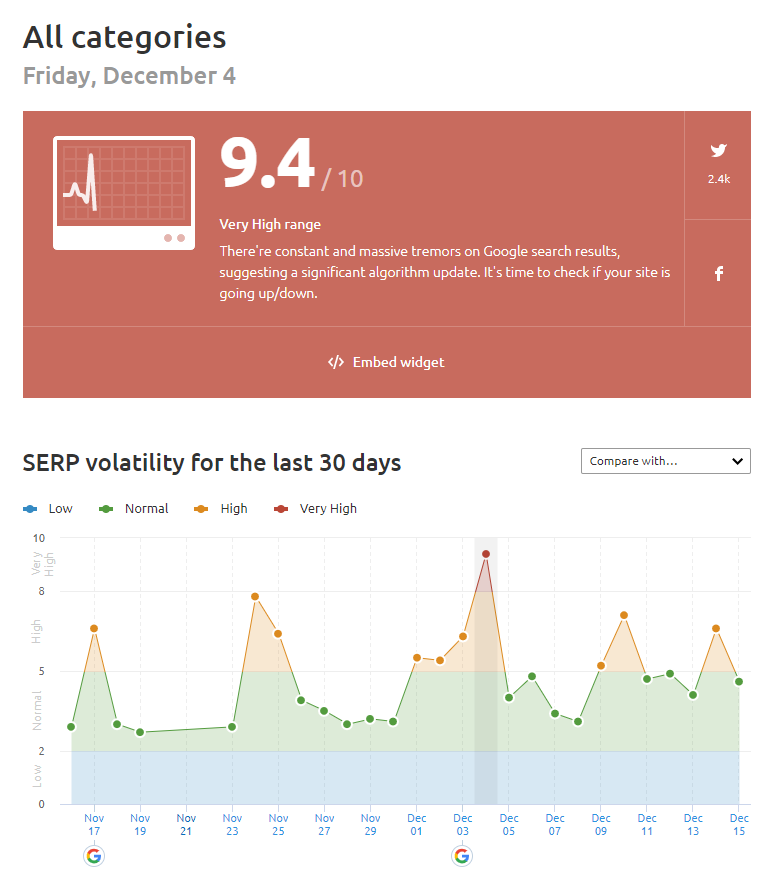

The Semrush sensor (which tracks SERP volatility) started spiking on the evening of the third, and kept rising all the way up to a whopping 9.4 on the fourth.

And for those hit by the update, the impact was felt immediately.

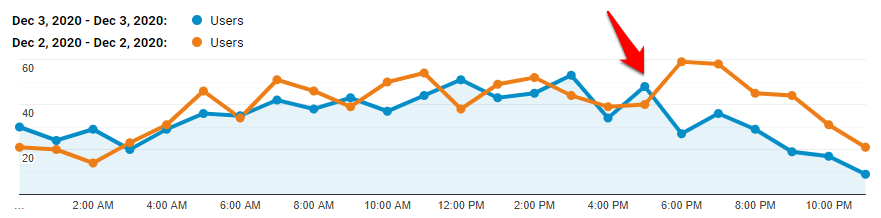

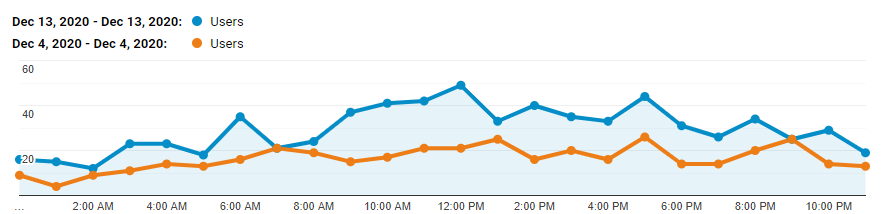

You can see the sudden divergence in hourly search traffic on this chart from Google Analytics (3rd December vs 2nd December).

In case you didn’t guess by the big red arrow, the Core update hit at around 5pm (PST).

That particular site lost around 60% of its search traffic overnight. Ouch.

Naturally those who were hit with the update were in a panic.

There’s no good time to lose search traffic. But for anyone involved in the retail sector (and let’s face it, most sites are to some extent) getting crushed at the start of the Christmas shopping season is quite literally devastating.

And we (us wise old SEOs again) figured that those who had lost traffic would need to sit tight, work on improving their sites, and wait for the next Core update for reassessment/recovery. We expected that — usual small fluctuations notwithstanding — it would be a few months before any big shake up in the SERPs again.

We anticipated that those hit would stay hit until the next Core update. Frozen in time if you will.

And once again we were completely wrong.

Because on the 10th of December, Google cranked the dial again.

And while it wasn’t quite as big an update as the 3rd, many sites saw a reversal in fortunes.

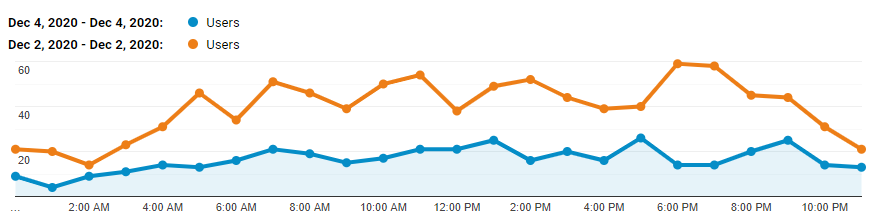

The site in the analytics screenshots above for example regained most of its traffic (Dec 13th vs Dec 4th).

And SEO forums such as Webmaster World were full of stories from those who had their initial gains of the 3rd completely reversed on the 10th.

Google giveth, Google taketh away.

Other sites stayed the same (they remained winners or losers).

And many weren’t impacted by either update and probably wondered what all the fuss was about.

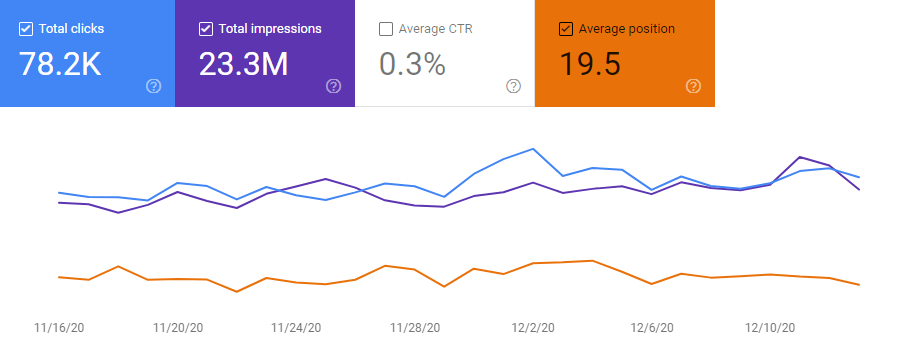

Like this retail directory site, whose Search Console Performance chart shows a relatively stable line (or lines) throughout the whole period.

Update? What update?

On the 16th December Google announced that the roll out of their latest core update was complete.

And like the Grand Old Duke of York’s men, if you’re up you’re up, and if you’re down you’re down. At least until the next Core update.

Update: there’s increasing chatter of a further SERP shake up today. So it seems the update might not be quite over yet!

Which means we can now take a look at the search results and make some assessments.

So let’s move on and ask a question. Or two questions if you want to be pedantic…

What is Google trying to achieve with their Core updates? And are they succeeding?

Google has a stock response for questions related to Core updates.

They’ll point you to this 2019 blog post by Danny Sullivan.

To save you a few minutes, we can summarize the 1,632 words into:

- Create quality content (or improve your existing content)

- Build your (and your site’s) credibility

Point 2 there is what’s generally referred to as E-A-T (expertise, authority, trust).

And we just published a huge guide to how to do that here.

So Google wants to rank high quality content from trusted sites.

Cool. All good so far.

And we definitely recommend you follow that advice.

But the problem is, if you’re someone like me who spends hours (days?) clicking through search results and digging under the hood, you’ll find example after example of sites ranking for highly competitive keywords which tick neither box.

Now I’m not trying to out specific sites in this post. All’s fair in love, war, and SEO.

(Although it does present a wider problem, which I’ll get to)

But here are some blatant examples of where Google’s quality and trust objective is falling apart.

1. Repurposed domains continue to coin it in

It’s no big secret.

Repurposed, high authority aged domains continue to rank fast, and rank high, even if the domain had no previous connection to the current niche.

In case you don’t know, a repurposed domain is a new site built on an old, expired domain, which has a strong, aged backlink profile.

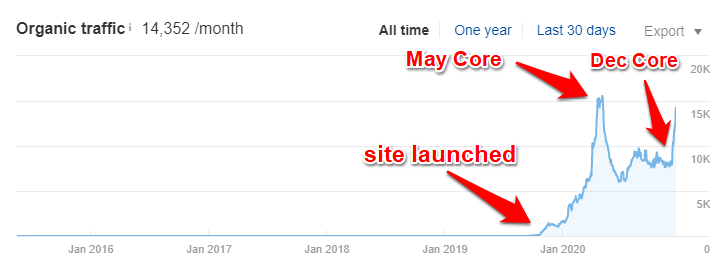

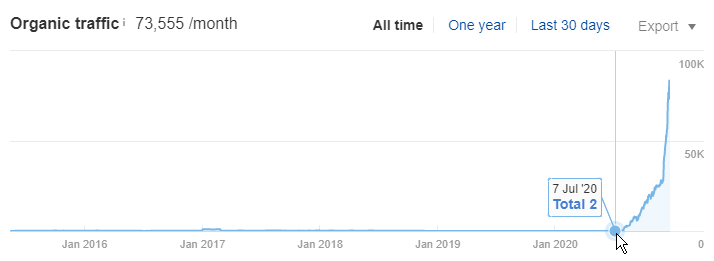

Here’s an example in the sports niche.

To summarize, the site above:

- was launched in September 2019

- quickly grew to 15k organics p/m* (making it one of the biggest sites in this lucrative niche)

- took a bit of a dip with the May 2020 Core update

- came roaring back after the December Core update

*traffic estimates from tools are exactly that, estimates, and should be taken with a grain of salt. However, I happen to know the actual volumes/CTRs in this particular niche – and I expect actual organic traffic is closer to 50k/month.

So why is it ranking? And why did it benefit from the December Core update?

Well, here’s what the site doesn’t have:

- An about us page

- Any contact info

- Any author info

- A privacy policy

And here’s what it does have:

- A ton of powerful links picked up between 2005 and 2011 when the domain was home to a European cultural organization

- Lots of low quality (but reasonably lengthy) keyword focused articles

To be clear, none of the links are in any way relevant to the current niche/site.

And for extra lolz, a quick look at the domain on archive.org shows that it also spent a couple of years (2017-2018) happily living as a PBN site for a law firm.

It’s been around the block.

The takeaways:

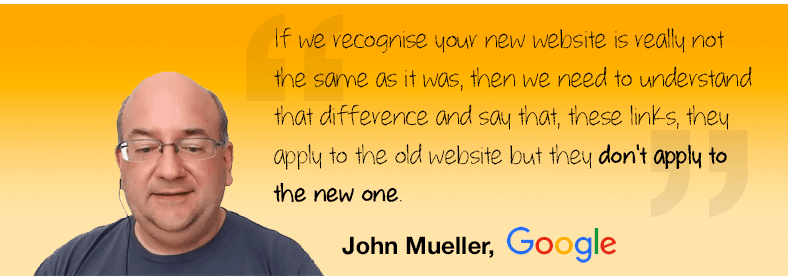

- Google still overly weights links in their algorithm, particularly when it comes to measuring trust.

- Despite what they might say publicly (see John Mueller’s quote below) there’s still no reset for links when an old domain is repurposed.

- And authority trumps relevance when it comes to links.

Are you sure about that John?

Update 22nd December:

Matt Diggity, from our friends over at Diggity Marketing, sent over this example of a repurposed domain in the health niche, which Google has taken a particular shine to.

If you think of the health niche that generates the most spam (and Google should be particularly sensitive about) you can probably guess what subniche it’s in.

And according to Matt, E-A-T is non-existent. It’s “all links”.

Google seems to be rewarding links. Especially high authority links. Repurposed domains are ranking left and right and they have nothing going for them except their links.

2. Google’s still got a big problem with cloaked redirects

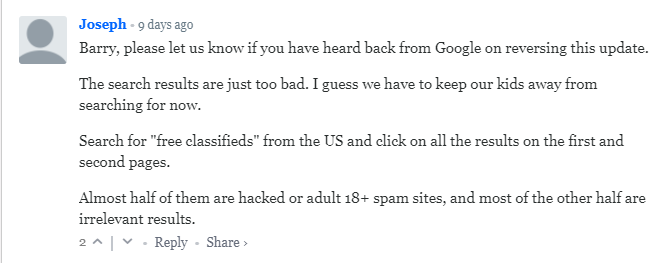

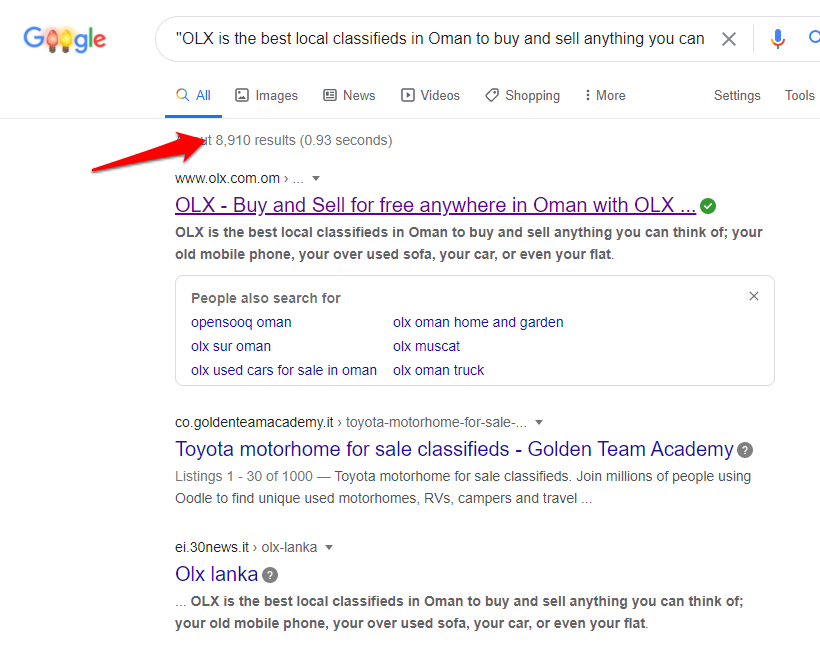

If you read the comments on Barry Schwartz’ post which covered the initial 4th December roll out, you might have seen a guy called Joseph ranting about the search results for “free classifieds”.

And the thing is… he’s completely right.

Here’s a screen recording showing what happens when you click on the result at position 9.

You can’t see it on the recording, but suffice to say, my anti-virus went nuts.

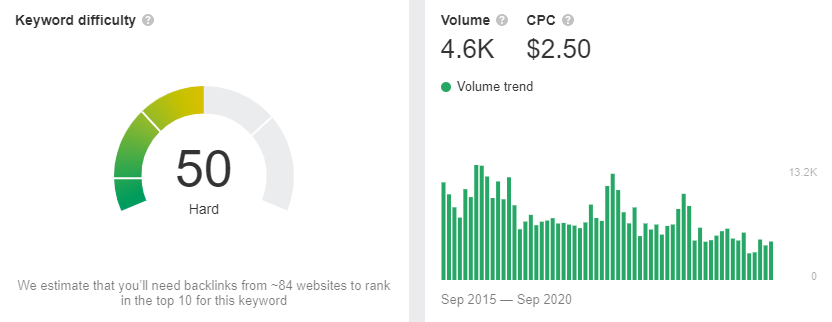

And “free classifieds” is not exactly a low competition keyword. It has a search volume of 4.6K, a difficulty score of 50, and a $2.50 CPC.

So it’s safe to assume that plenty of people are going to be clicking on that result. Some of them might even end up with a virus on their machine.

YMYL Google?

This isn’t a new problem. But it certainly hasn’t gone away with the latest Core update. In my post-update SERP digging I came across spammy redirects on numerous search results.

In case you’re not familiar with what’s going on here, the website is showing Google different content to what a user will actually see when they hit the page. It’s a technique called cloaking, has been around for as long as Google has, and really should have been dealt with a long time ago.

Needless to say, it’s a clear violation of Google’s webmaster guidelines.

Cloaking refers to the practice of presenting different content or URLs to human users and search engines. Cloaking is considered a violation of Google’s Webmaster Guidelines because it provides our users with different results than they expected.
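For readers who want to spot-check a suspicious result themselves, here is a minimal sketch of the idea: request the same URL twice, once with a browser-style User-Agent and once with a Googlebot-style one, and compare what comes back. The helper names (`fetch`, `similarity`, `looks_cloaked`) and the 0.5 threshold are illustrative assumptions, not part of any Google tool. It only catches cloaking keyed on the User-Agent header; sites that verify the crawler by IP address, key on the referrer, or redirect with JavaScript will slip past it.

```python
import difflib
import urllib.request

# Illustrative User-Agent strings (assumptions for this sketch).
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/87.0 Safari/537.36"
)
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch(url, user_agent, timeout=10):
    """Download a page while presenting the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(text_a, text_b):
    """Return a ratio between 0 and 1 describing how alike two documents are."""
    return difflib.SequenceMatcher(None, text_a, text_b).ratio()


def looks_cloaked(url, fetcher=fetch, threshold=0.5):
    """Flag a URL when the 'crawler' and 'browser' responses differ sharply.

    The threshold is an arbitrary starting point, not a calibrated value.
    """
    as_browser = fetcher(url, BROWSER_UA)
    as_crawler = fetcher(url, GOOGLEBOT_UA)
    return similarity(as_browser, as_crawler) < threshold
```

The `fetcher` parameter is there so the comparison logic can be tried against saved copies of a page without touching the network. A flagged URL is a prompt for a manual look, not proof of cloaking, since legitimate pages can also vary between requests.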

But it also highlights a wider issue with Google’s assessment of content quality.

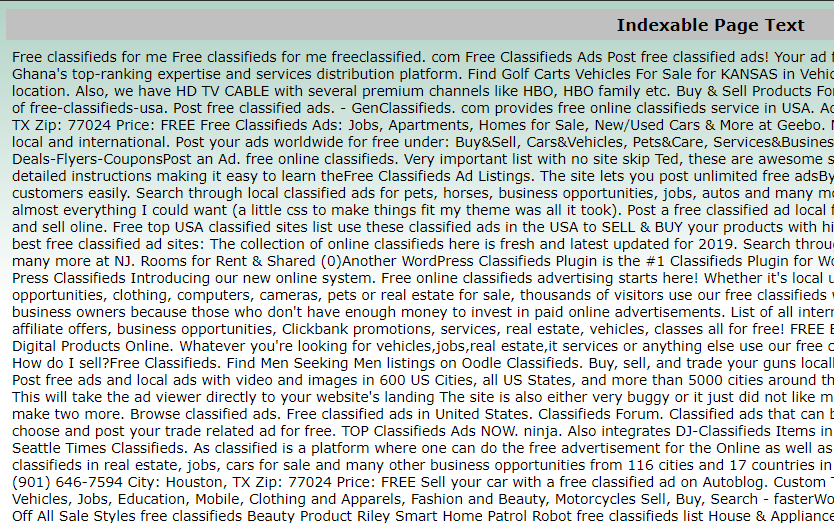

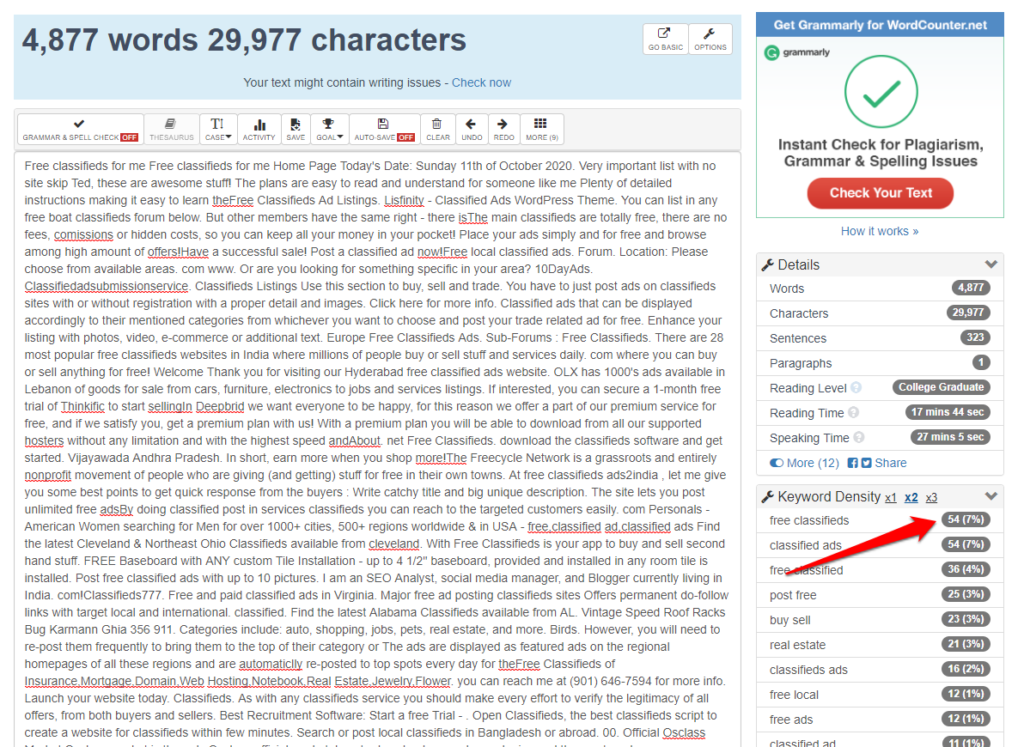

Because we can use this tool to find out what Googlebot actually sees when it crawls the page.

Yep, a load of scraped, gobbledygook text.

Almost 5,000 words of scraped, gobbledygook text to be specific…

…with the phrase “free classifieds” repeated 54 times for a keyword density of 7%.

I can’t quite believe I’m talking about keyword density in 2020, but we are where we are.
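If you want to run the same kind of check on a page you’re suspicious of, a rough keyword density calculation takes only a few lines. This is a sketch under one common definition (words belonging to the phrase divided by total words); the function name is made up for illustration. The article doesn’t say how the 7% figure above was calculated, and tools define density differently, so don’t expect the numbers to line up exactly.

```python
import re


def keyword_density(text, phrase):
    """Return (occurrences, density) for a phrase within a block of text.

    Density here = words belonging to the phrase / total words.
    Overlapping matches are each counted. Other tools use other
    definitions, so their figures may differ.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase_words = re.findall(r"[a-z0-9']+", phrase.lower())
    n = len(phrase_words)
    if not words or n == 0:
        return 0, 0.0
    occurrences = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase_words
    )
    return occurrences, occurrences * n / len(words)
```

Calling `keyword_density(page_text, "free classifieds")` on the text Googlebot sees gives a count and a ratio you can compare against competing pages in the same SERP.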

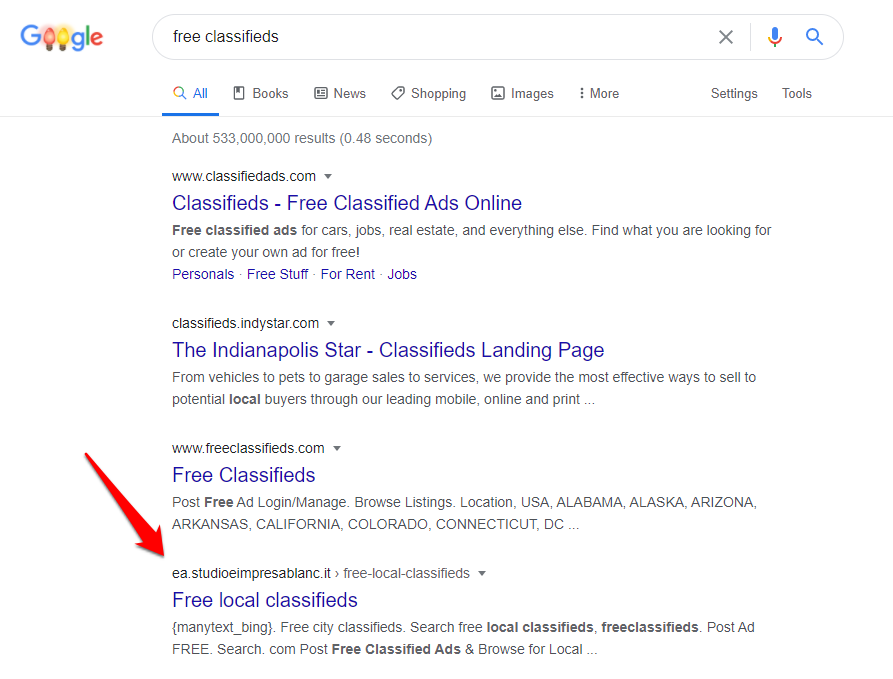

If I pick a random sentence from the scraped content I can find the original source.

Which also reveals that there are literally thousands of these scraper sites using exactly the same text, and they’re all indexed by Google.

The takeaways

- Google still hasn’t figured out how to identify cloaked URLs, at least not in a timely manner before they’re indexed and ranked

- Scraped text, combined from multiple sources can (and does) rank

- Google’s not as advanced at figuring out what is and isn’t quality content as they would like us to believe

- Keyword density is probably still a thing (ugh)

Note: the page in the screen recording was ranking for at least three days, but now appears to be gone. However, it’s since been replaced by a new cloaked page, which is ranking even higher.

It’s SERP whack-a-mole.

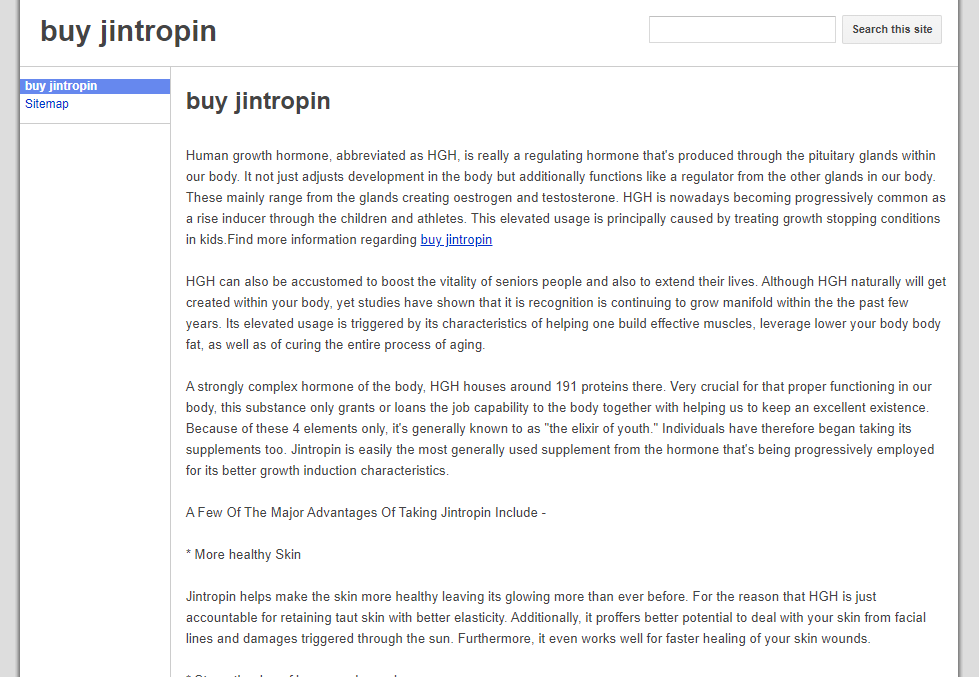

3. Can’t rank for a YMYL keyword? Throw up a doorway page on a Google site

You might have heard of parasite SEO before. It involves setting up a page (sometimes legitimately, sometimes not so legitimately) on a high authority domain, and ranking that single page for a competitive keyword based on the host domain’s strength.

Normally the page will be set up as a doorway page, which exists simply to link back to a money site.

Google doesn’t like doorway pages.

Doorways are sites or pages created to rank highly for specific search queries. They are bad for users because they can lead to multiple similar pages in user search results, where each result ends up taking the user to essentially the same destination. They can also lead users to intermediate pages that are not as useful as the final destination.

But unfortunately…

…it happens to host a LOT of them.

In fact, I checked the organic keywords for “sites.google.com/site/” and discovered that the subdomain currently ranks on page one for over 13,000 “buy” keywords.

Now some of these might be legitimate sites.

But a big percentage of them look like this….

Which at the time of writing (after the Core update has fully rolled out) is still sitting pretty at #1 for its target keyword.

A keyword which gets 100 US searches a month and is very much YMYL.

That’s just one example. But there are THOUSANDS of similar doorway pages hosted on single page Google sites. They rank for everything from cheap flights, to credit card offers, to imported cigarettes, and every pill and potion under the sun.

And of course, it’s not just Google sites. There are plenty of other domains (unwittingly) hosting similar doorway pages.

Let’s not get started on Pinterest…

The takeaway

Doorway pages on high authority parent domains still rank for many competitive (often YMYL) terms

Overall takeaway: Google’s going for the squirrels and completely disregarding the elephants

I highlighted the three tactics above — repurposed domains, cloaked pages, doorway pages — because they’re clear and obvious. They should be easy for a search engine as advanced as Google to spot and filter out.

They’re the elephants in the room, which Google seems to be either incapable, or unwilling to deal with.

Meanwhile, the squirrels — who may be a little mischievous, but generally play by the rules — get punished.

And that’s a big problem.

Here’s why.

Why Google’s Core updates encourage more spam, and less investment in quality content

I’m going to kick off this section by quoting a post from Webmaster World. Because I really can’t put it any better myself.

“At least before you knew that provided you didn’t break Google’s webmaster guidelines and genuinely produced useful content, you were never going to be affected by an update reducing your traffic by -40%-80% just like that.

That stuff was supposed to be reserved for the spammers, scrappers and link scheme guys.

Now all these people outrank you with their expired domain 301 redirect and Fiverr articles just because of their link authority.

Now you suddenly ask why you shouldn’t start to spam yourself. Sure, you will get penalized eventually but so will you with your “white hat” site anyway at one point, and making a spam site is so much cheaper and simpler than a legit one. Why not just start making 10?

And now the web is a poorer place because you can’t find genuinely expert content anymore in narrow fields that aren’t covered by the big brands and mainstream sites. And full of even more spammers.”

Nail. On. Head.

Because it’s not just the fact that spam continues to rank that’s the problem. The spam has always been there.

It’s the fact that even if you’re playing by the rules, you might still take a big hit in a Core update.

Can’t happen to you? Well…

You might not be alright Jack

There are plenty of SEOs that will tell you that they don’t worry about Google Core updates as they’re doing nothing wrong. In fact, I used to be one of them.

But they should.

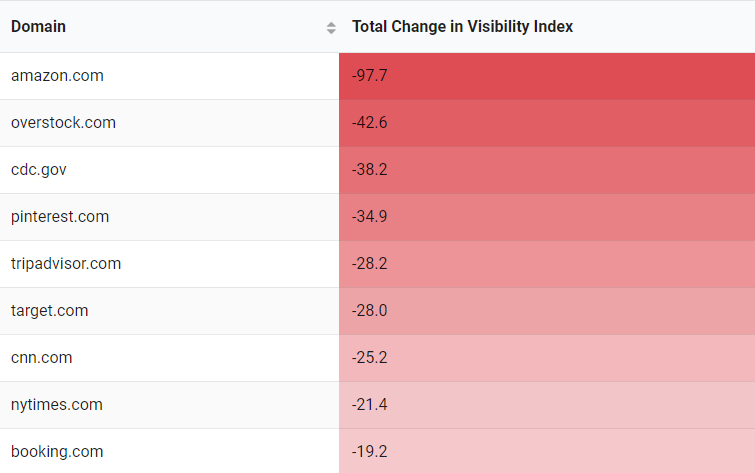

Because if the New York Times can lose a whack of search visibility overnight, then so can anyone.

Note: this doesn’t imply that the New York Times lost 21.4% of their traffic in the update (it’s a bit more complex than that), but it’s reasonable to assume they took a decent sized hit.

It’s hard to argue that content quality and trust is the issue when we’re talking about The New York Times.

And what’s more…

What’s good today might not be good tomorrow (but might be fine again in three months’ time)

I’ve been involved in SEO for over 20 years. I’ve been there in the trenches through every major update. I danced the Google dance back in the late 90s. I remember when “Florida” hit in 2003 — it was a clear improvement.

Penguin made sense. Panda made sense. Hummingbird made a lot of sense.

Medic kind of made sense.

But ever since, there’s been a seeming randomness to Google’s Core updates.

I’ve seen plenty of sites that were crushed in one, changed nothing, recovered completely, were hit in the next one, recovered again…

We’re talking about going from the top spots, to nowhere, to back in the top spots again.

Hundreds of articles have been written about how to recover from Core updates. I would wager that “do nothing and wait” is not advice you’ll see in many of them.

Now I should point out here that sometimes (perhaps most of the time), it’s clear what’s up. There’s an obvious issue with E-A-T or content quality that can be addressed. Or there’s a technical issue holding the site back.

But other times, there really is no logical reason for a hit. Which chimes with this horrible paragraph from Google’s Core update advice post:

We know those with sites that experience drops will be looking for a fix, and we want to ensure they don’t try to fix the wrong things. Moreover, there might not be anything to fix at all.

“There’s nothing you can do about it” isn’t what you want to hear when you’ve just lost 90% of your revenue overnight.

You didn’t do anything wrong, but you’re not going to eat tomorrow. Wait three months. Soz.

With great power comes great responsibility

(apologies for the cliché)

Let me dial back a little at this stage and say that Google has an incredibly tough job.

According to this post they successfully catch 25 billion newly discovered spam pages every single day.

That’s a mind blowing amount of content. The equivalent of every man, woman, and child on Earth churning out 3+ pages of spam every 24 hours.

And I’ve no reason to doubt their claim (in the same post) that 99% of visits from Google search results lead to spam free experiences.

It’s also fair to say that Google can’t please everyone. In any niche there’s going to be multiple sites competing for the top spots. They all want to be number 1, but by definition, only one of them can be.

Ups and downs in search traffic are to be expected. If a competitor is working harder than you — creating better content, getting better reviews, earning better links — they should be rewarded. And vice versa.

But while a dip in traffic can be weathered, a sudden and total loss of visibility (for which there is no obvious cause) cannot.

Google has the power to close a business down with the flick of a switch. And while the percentage of “good” sites that get incorrectly lumped in with the “bad” may be small, that’s no consolation if one of those false positives happens to be your business.

Danny Sullivan, Google’s public search liaison, is a great guy. He deals with a lot of heat from webmasters with good grace, patience and humour. But I took issue with this tweet.

Business owners would like to succeed. With search, that typically means getting visitors that they hope to convert in some way, at no or low price and effort, especially since they have businesses to run. Or am I off the mark here?….

— Danny Sullivan (@dannysullivan) December 8, 2020

Because for a business doing things the right way search traffic is certainly not free.

While that final click may be, it’s the result of previous investment — be that in money, or time — in creating the kind of quality content that Google wishes to surface in its search results.

It’s the result of hard work building relationships, getting mentioned on other sites in your niche or in the media, providing great customer service, creating a great product.

Or it’s the result of buying an aged expired domain with strong (but unrelated) links, and throwing up some cheap content from Fiverr.

The incentive and motivation to invest in high quality content decreases when there’s a risk (even if that risk is small) that you could lose it all overnight. And the temptation to gamble with spam tactics (with considerably lower capital investment risk) becomes more compelling — particularly when those tactics can be seen to be working for competitors.

25 billion spam pages a day becomes 50 billion, 100 billion, a googol…

And that’s not a good place for the web to be.

In conclusion (and our advice if you’ve been hit by a Core update)

Google’s Core updates are designed to improve search quality.

They may be generally succeeding (although that’s open to debate).

But there are still some HUGE loopholes that spammers are taking advantage of to rank. And the latest Core update doesn’t seem to have improved Google’s ability to detect and filter out these particular tactics.

- Repurposed domains are still riding high

- Cloaked redirects still litter the SERPs (and can be dangerous for users)

- Doorway pages (or parasite pages) rank for numerous YMYL queries

On top of that (at least in my opinion) Google’s Core updates can be too punitive on “white hat” sites which have not violated Google’s guidelines. Fluctuations for these sites should be expected, but not complete loss of visibility.

That said, I remain optimistic that Google genuinely wishes to improve its search results, and has no desire to penalize sites that are playing by the rules, creating great content, and providing a good experience for their users.

I believe we’re looking at collateral damage from Google’s fight against spam. False positives perhaps, which would account for the ups and downs between Core updates when nothing changes in the interim.

But false positives or otherwise, these big drops for “white hat” sites are devastating. And even if it’s only happening to a small (but understandably vocal) minority, they deserve to be listened to.

To put the scale into context, if one in a million web pages (0.0001%) are incorrectly flagged as low quality in a Core update, that’s still six hundred thousand web pages. That’s a lot of collateral damage.
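The arithmetic behind that figure can be checked quickly. The sketch below works backwards from the two numbers quoted above to the index size they imply, which the article leaves unstated; the function names are just for illustration.

```python
def implied_index_size(flagged_pages, one_in):
    """Total pages implied by a flagged count and a 'one in N' error rate."""
    return flagged_pages * one_in


def rate_as_percent(one_in):
    """Express a 'one in N' rate as a percentage."""
    return 100 / one_in


# Figures quoted in the article: one in a million, 600,000 pages flagged.
print(f"{implied_index_size(600_000, 1_000_000):,} pages in the index")
print(f"{rate_as_percent(1_000_000)}% of pages flagged")
```

In other words, the estimate assumes an index of roughly 600 billion pages. Plug in a different index size and the collateral damage scales with it.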

Finally, if you’ve been hit by the recent Google Core update, our advice for now is to follow Google’s advice:

- Take an objective look at your content, and consider how it could be improved. Are you completely satisfying the search intent for your target keywords?

- Conduct a full SEO audit and ensure there are no technical issues holding your site back

- Work on your on-site E-A-T signals (be clear about who you are, your expertise, why users should trust you)

- Work on your site speed, and make sure your Core Web Vitals are up to scratch

- Work on earning high quality backlinks to boost your site’s authority

If you have any questions, feel free to drop us a comment below.

And hop on our mailing list for a slate of in-depth SEO tutorials and case studies coming your way in 2021.


39 thoughts on “December Core Update Analysis: Sorry Google, You Have a Spam Problem [Opinion]”

Amazing insights. Yes, I think that most web pages have suffered from these radical changes. Google’s updates favor only big websites.

Thanks Nardi. Hopefully things will level up a bit over time.

Great insight in the article, you are right about with great power, comes great responsibility. Google should take care of these activities. Thanks for this valuable post.

thanks Beghama!

Sometimes Google can be a weird beast evolving backwards to add new tricks that slip up (or itself up).

I think the beast has been biting itself a lot recently


You nailed it with this article. I lost roughly 75% of my traffic overnight with the December update. The worst part is my site had just reached new heights and was continuing to climb. I don’t understand how I could be doing everything right one day, to doing most things wrong the next day. My website was/is the epitome of a white hat site. In fact I never got involved in the whole backlink building nonsense because I really didn’t want to go down that rabbit hole. I focused on writing long, in-depth articles. After all, quality content is king right? All of my articles are over 3,000 words with many in the range of 4 – 5,000 words. The site is a little over 2 years old and has steadily grown in organic traffic since launch. The week before the update I finally cracked 2,000 organic visits in a day and was expecting continued growth. Today I’m averaging about 370 organic visits per day, 400 on a good day. With all of that lost traffic came lost revenue. In fact I went from earning a full-time income, to oh crap, I have to look for a job now. Disheartening to say the least.

Hi Derek, sorry to hear about your drop.

Since it seems to me Google are overweighting link authority (hence the repurposed domains etc doing so well) it sounds like you may have been overtaken by sites with stronger link profiles. And when I say “stronger” I don’t mean quality, as Google seems to be struggling to differentiate quality from quantity at the moment.

I wouldn’t go ripping the house down though, as it may be you’ll recover in the next core update. But I’d suggest running a full technical audit just to make sure everything is up to scratch, then perhaps doing some outreach for some of your better content.

I’m guessing you might not want to share your site here, but if you did I’d be happy to take a quick look and see if I can spot anything obvious. Although as Google themselves say, there’s often “nothing to fix”.

Great insight in the article, you are right about with great power, comes great responsibility. Google should take care of these activities. Thanks for this valuable post.

Thanks Harsh, glad you found the post helpful.

I no longer appear in the most read news about 16.12.2020. Also, the news came with a search for “domain name” on page 26. (Note: 16% 404 / %8 301). Can you see a very obvious error on my website? You can add it to your portfolio to increase your research resources. I don’t understand why my website is losing ranking.. thank you

Hi Ahmet, can’t really comment on specific cases (and not actually sure which site you mean as you seem to have posted this comment twice). Would advise you read our guide on E-A-T and also run a full SEO audit on your site to look for any technical issues.

Google generally says there’s nothing the people need to do. What I can suggest is to look at the lost keywords on your domain and to analyse them. 1) Should I really be ranking for this? 2) Is the content matching what the user is expecting (matching search intention) 3) Is the content as good as the competitors? We have a tutorial on analysing keyword changes

Generally, taking a critical look at your content (and making sure you match search intent) is good advice and something you should do. But that’s not the reason for a lot of the drops (and for the spam that’s ranking in many SERPs that shouldn’t be).

I have a distinct feeling that Google’s moved heavily towards a machine learning dependency. As such their results will come off a very varied but low base. This gives everyone terrible results to start with… sure a site may see a recovery in time – if they have enough branded searches to counteract this at the onset.

Great piece, thanks for spending the time (which is money Google) to put it together.

I think that’s a reasonable assumption Robert. An interesting question would be what primary goal has the AI been set (optimize for revenue, or optimize for search quality). This may seem overly simplistic (and it is), but ultimately one has to take priority over the other.

Fantastic write-up, David. You dare to say what many SEO experts do not: the current reality in Google search. One of my competitors in our niche is a huge, authoritative brand with a bunch of backlinks from wikis and national news sites. 80% of their traffic comes from direct and social, because they are no. 1 in this niche. Google sent them a Santa Claus update in December, and now their 5 million organic traffic has taken a nose dive to the bottom with no sign of recovery soon. That makes our loss of 20% of traffic in this update suddenly seem bearable 😀

Thanks. Obviously I can’t make a call on a specific case (without knowing the site and digging under the hood), but a lot of the yo-yoing makes zero sense.

I wholeheartedly agree with everything in this great article.

Unfortunately, in this day and age the volatility is insane. The drops in traffic we see being reported after every core update would only be caused by server outages 10 years ago. Google has normalized disasters.

On another issue commented on above me (The great authority site push)

Through some of my niches I have lost up to 50% of traffic to low quality big brand authority site pages which rank simply because of their overall site strength.

More and more in my personal searches I will get a result from a big brand that reads as generic as humanly possible just to take advantage of their authority ranking #1. While the smaller sites that actually thoroughly answered the question are gone. I then have to go to duckduckgo to retype my search.

I realize that after the “holocaust doesn’t exist” results debacle they had to dial authority way up to keep such results out, but I feel they went way too far. We’re going from Joe the carpenter talking about his experiences and ideas on the best hammer to a generic Business Insider article being the #1 result because their authority makes Joe invisible.

Glad you mentioned Business Insider Andy. I almost used them as an example (and another point) in the article.

Playing a “Google a random product niche” drinking game, and taking a shot every time Business Insider shows up on page 1 would be a good way to get drunk real fast.

Great read. Really interesting. I lost my main business in the update before last; just wiped out after ranking consistently on the first page, albeit with minor fluctuations for many years. We no longer rank for our brand name, which I spent 10 years building up a great reputation for. I reached out to Danny Sullivan on Twitter for some direction and he initially responded but then went quiet – I assume he had no answer. There is no logical reason for us not to rank for our brand name. Then, in this most recent update, the same happened to my other business. Completely white hat, quality content, ranking very strongly for two years to the point Google would often feature two of our pages on page one for a single query, and then dumped down on page 7 and beyond for all main keywords. Of course, there are always areas of a site that can be improved and should evolve with the latest standards, and one expects to drop a few spots if you don’t. But to go from one of the leading sites to being outranked by spammy, poorly written sites is a tough pill to swallow. It has also meant a 75% drop in income.

Thanks Peter. Really sorry to hear about the issues you’ve been having. Out of interest what niches (no need to be specific, just broadly) were these sites in?

Spot. On. Buddy. Great write up.

Google is not making any sense.

The big one is like you mentioned, update comes and you almost lose your business, then new core update comes and fixes it all, then it is taken away again in a future update. All within 2 years.

I am in such a small niche that it is surprising Google can’t figure out if we are the best result or the 20th result. We are either top 2 or lose 20 or positions during an update.

Google should look for some consistency.

And Danny should know better, nothing is free. Google is the one benefiting the most from webmasters. He needs to come back to earth.

I guess consensus is to get more links, quality links don’t seem to be huge factor.

Thanks again for the write up, it was helpful.

SE Round Table seems to be an example of a site that recovered by doing nothing (or at least just continuing as he had been previously):

https://www.seroundtable.com/hit-google-december-2020-core-update-30619.html

It’s to be expected that as Google tweaks the weighting of some specific factor there will be fluctuations. But the wild swings up and down between updates (observed on many sites) do not seem logical.

Our client’s Media News Outlet website ranking dropped after the update. I expected improvement after rolling out but stats still the same, even dropping day by day..

sorry to hear that Viki. Hope the next update is kinder for you, and in the mean time all you can do is focus on making the best site you can for your users.

Thanks for the great article.

With reference to your #2 point about cloaking, we are seeing a ton of these search results for “dating” and “chat” related keywords. It makes sense because the advertisements users are redirected to are related to those niches/demographics (somewhat).

Anyway, I opened a thread on the Google Search Console community forums a little over a week ago: https://support.google.com/webmasters/thread/87322852?hl=en

Really didn’t know this was as widespread as it is. Thanks for the thorough explanation of what cloaking is.

These spam webpages are everywhere and ranking quite high. Some as high as #1 for certain keywords. I’ve seen much higher quality websites sitting much lower than this garbage. Sad to see and hope Google does something about it.

Cheers.

Yes, it’s everywhere. But worryingly, Google doesn’t seem to think it’s a problem.

Cloaked URLs are becoming a bigger and bigger problem. I’m seeing it in the chat niche as well. “Teen Chat” has 3 in the top 20, some of which redirect to NSFW domains. I hope they figure this out before they truly take over.

I checked numerous verticals, and found them everywhere. Loads on shopping related queries. For example, I looked at the 5 top selling toys this year. Searched them all with “buy” in front, and while the first couple of pages were relatively clean (if crowded with amz, ebay, target) page 3 onwards was the wild west.

Great article! Google certainly has a problem with spam. However, I think that with E-A-T and links becoming more and more important, the variety of pages ranking for keywords tends to narrow to very big sites or shops, and all the niche pages earning money through affiliate marketing do not have a chance anymore. And I am not talking about the low quality sites doing this.

I totally understand and agree with the concept of E-A-T. However, just operating a shop selling knives should not give you a better chance to rank than writing very good content on products and offering them through affiliate programs. Also, since bigger sites find it really easy to rank for almost any keyword, they employ hundreds of people to write articles of low quality, and still rank much higher than objectively good articles on the same subject from, e.g., an SEO company that has neither the E-A-T nor the backlink profile of a big news site.

The problem I see is a concentration of bigger sites that use their authority to invest in other areas, populating the web with low quality content. On the other side, smaller companies with lower budgets that begin working in a subject area, even with high quality content, will not have a chance to appear for any keyword for years, simply because it will take years to build up E-A-T signals and a domain authority comparable to the big sites.

I used to rank in position 1 or 2 for many information pages on very tiny health subjects. My content is unique, written by professionals, and objectively better than anything any health site has published so far. Thanks to the core updates, I am gone now, replaced by one-page articles on big health sites that write on thousands of subjects and have a doctor as their director. That’s it, Google? The bigger online companies become bigger and bigger and the quality of content gets lower and lower. Great job…

Yes, it’s a separate issue to an extent, but a highly relevant one. Much harder for smaller, specialist sites to grab market share now.

Great article. I learned a lot. Being totally wedded to continually improving my site by doing all the things that Google claims make a difference (EAT, high quality content with much of the writing by physician experts, zero black hat, etc.), I spent a year recovering from YMYL only to get whacked by the December update. It seems a lot of health search traffic went to a handful of giant encyclopedia-type health sites. Is that really what we want? I have stories that have attracted self-built comment communities. People helping people. These stories are helping them in a personal way that the encyclopedias do not. It’s very discouraging to spend a ton of time and money to maintain a high quality site only to get beaten down by spammers and a handful of super well funded sites. Is that really what users want?

Thanks Pat, and sorry to hear you’ve been hit by the most recent update. From a quick look at your site (admittedly a 2 minute assessment) it seems you’re ticking a lot of boxes that you should be. And the consolidation of traffic to a handful of sites in many niches is actually another, wider topic and issue.

Hi David,

Excellent write-up. I can confirm that repurposed domains are doing great in SERPs. One of my sites took a huge dip (down ~80%), and my competitor with a site built on an expired domain is ruling the SERPs. Their content is garbage. No author bio, no about us page, and some articles seem to be generated by some spinning tool.

But they have an excellent backlink profile with links from sites like theguardian.com, Washingtonpost.com, bbc.co.uk, etc.

Thanks Akash. Yes, it’s rife, and a huge blind spot for Google.

Great insight in this article, lots of good nuggets of information. It’s interesting about the repurposed domains. I am not seeing a huge improvement through 301 redirects, but I’ve also tried to get more mainstream sites and not ones with thousands of links.

Thanks Don. I’m still seeing 301s working well, and generally it looks like sheer link volume (assuming at least some measure of quality) is the critical metric.